@inproceedings{10.1145/2598510.2598518,

author = {Li, Jiannan and Greenberg, Saul and Sharlin, Ehud and Jorge, Joaquim},

title = {Interactive Two-Sided Transparent Displays: Designing for Collaboration},

year = {2014},

isbn = {9781450329026},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/2598510.2598518},

doi = {10.1145/2598510.2598518},

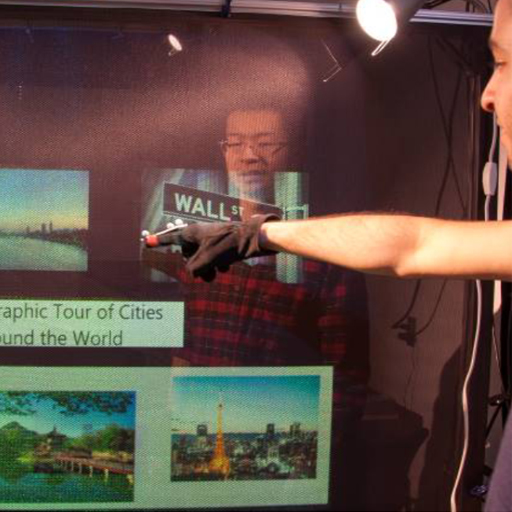

abstract = {Transparent displays can serve as an important collaborative medium supporting face-to-face
interactions over a shared visual work surface. Such displays enhance workspace awareness:
when a person is working on one side of a transparent display, the person on the other
side can see the other's body, hand gestures, gaze and what he or she is actually
manipulating on the shared screen. Even so, we argue that designing such transparent
displays must go beyond current offerings if it is to support collaboration. First,
both sides of the display must accept interactive input, preferably by at least touch
and/or pen, as that affords the ability for either person to directly interact with
the workspace items. Second, and more controversially, both sides of the display must
be able to present different content, albeit selectively. Third (and related to the
second point), because screen contents and lighting can partially obscure what can
be seen through the surface, the display should visually enhance the actions of the
person on the other side to better support workspace awareness. We describe our prototype
FACINGBOARD-2 system, where we concentrate on how its design supports these three
collaborative requirements.},

booktitle = {Proceedings of the 2014 Conference on Designing Interactive Systems},

pages = {395--404},

numpages = {10},

keywords = {workspace awareness, two-sided transparent displays, collaborative systems},

location = {Vancouver, BC, Canada},

series = {DIS '14}

}