As a Research Scientist at Adobe Research, I build emerging video and audio production tools with generative AI, with the goal of empowering us (humans) to create art and tell stories.

I received my Ph.D. and M.Sc. in Computer Science from the University of Toronto and my B.Sc. in Computer Science from National Taiwan University.

Publications

I primarily publish systems HCI work at top-tier venues (CHI, UIST, IUI), with two papers among the six most-cited UIST papers published in the past five years.

CHI 2026

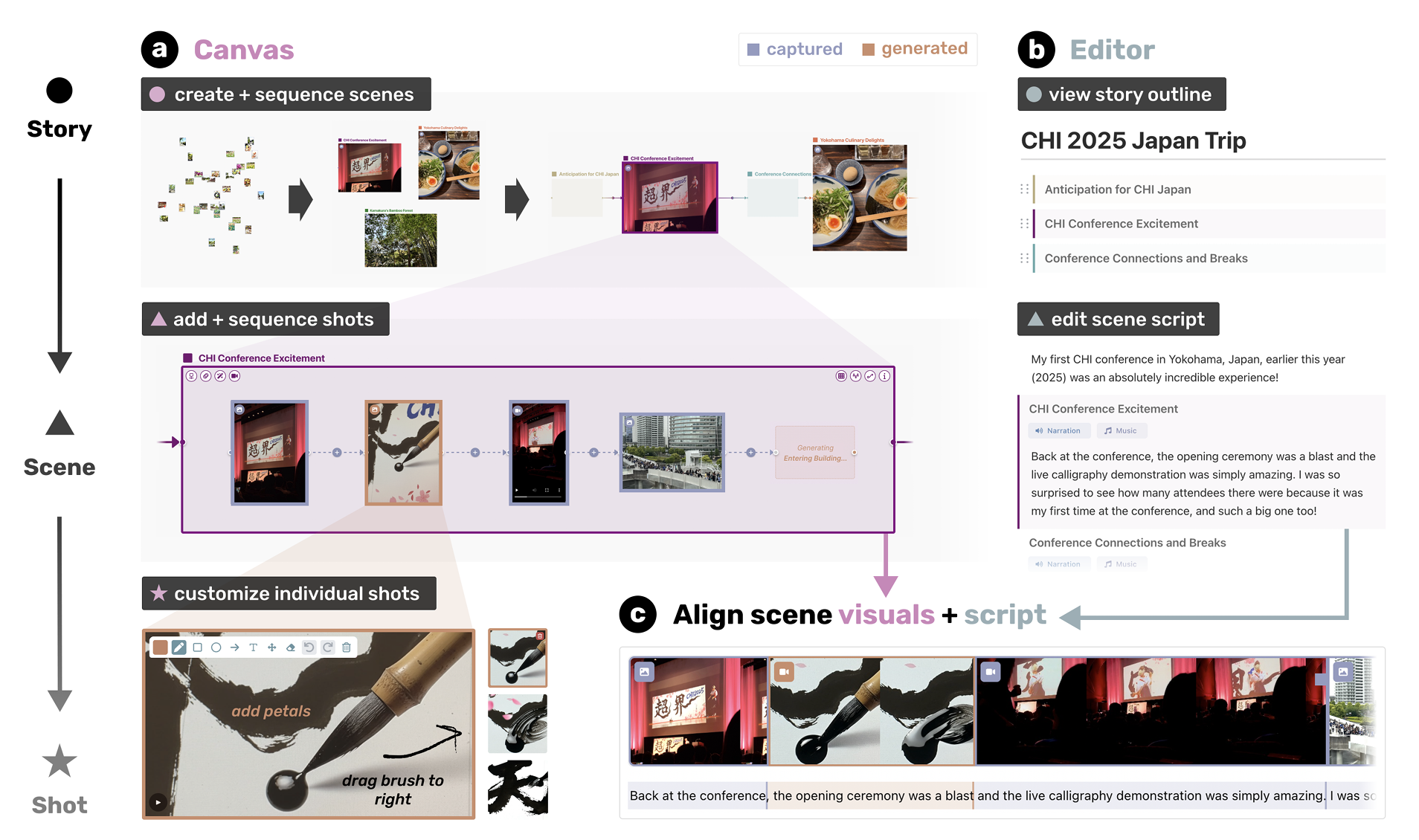

Vidmento: Creating Video Stories Through Context-Aware Expansion With Generative Video

Catherine Yeh, Anh Truong, Mira Dontcheva, Bryan Wang

CHI 2026

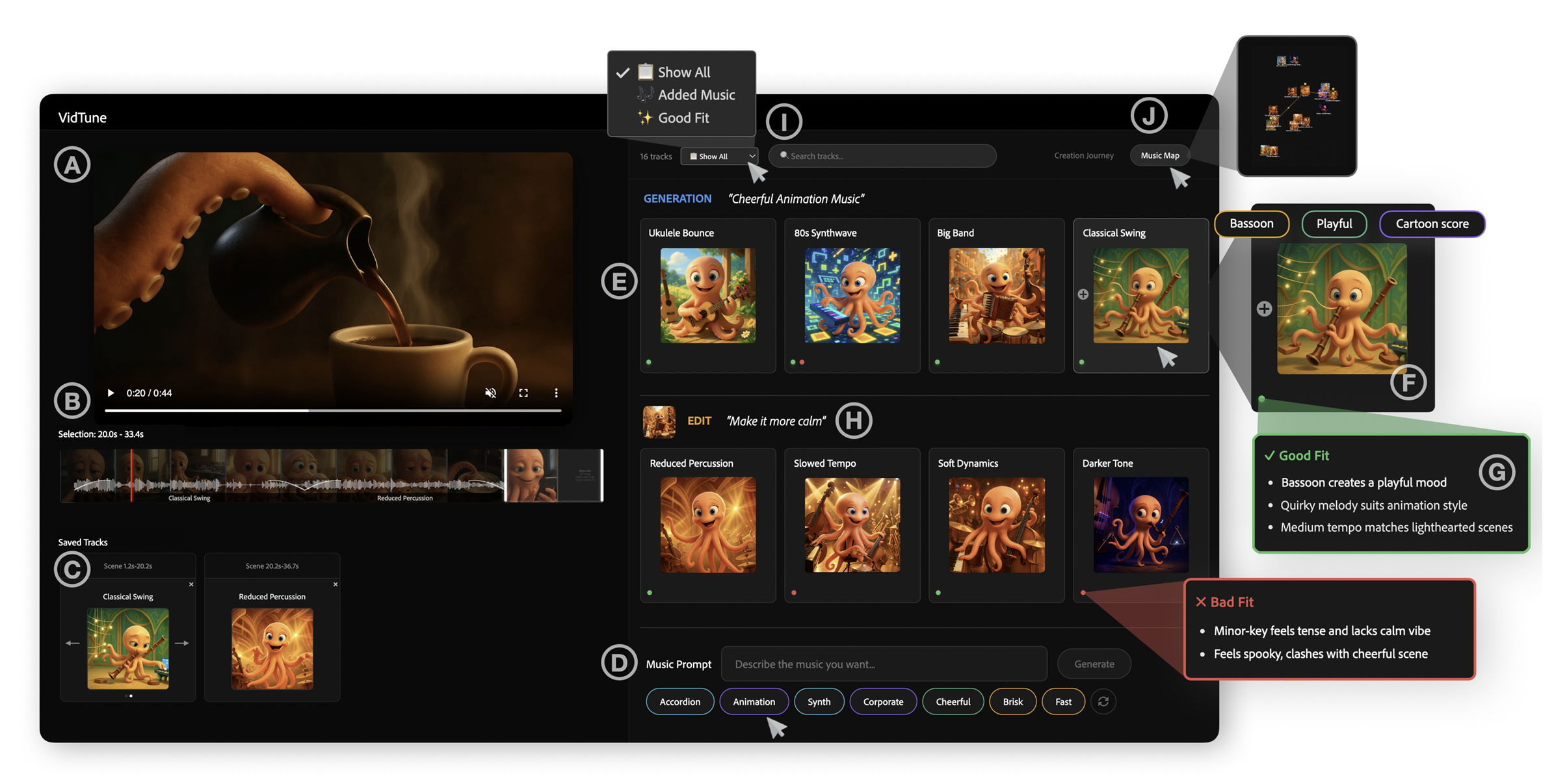

VidTune: Creating Video Soundtracks with Generative Music and Contextual Thumbnails

Mina Huh, Ailie C. Fraser, Dingzeyu Li, Mira Dontcheva, Bryan Wang

IUI 2026

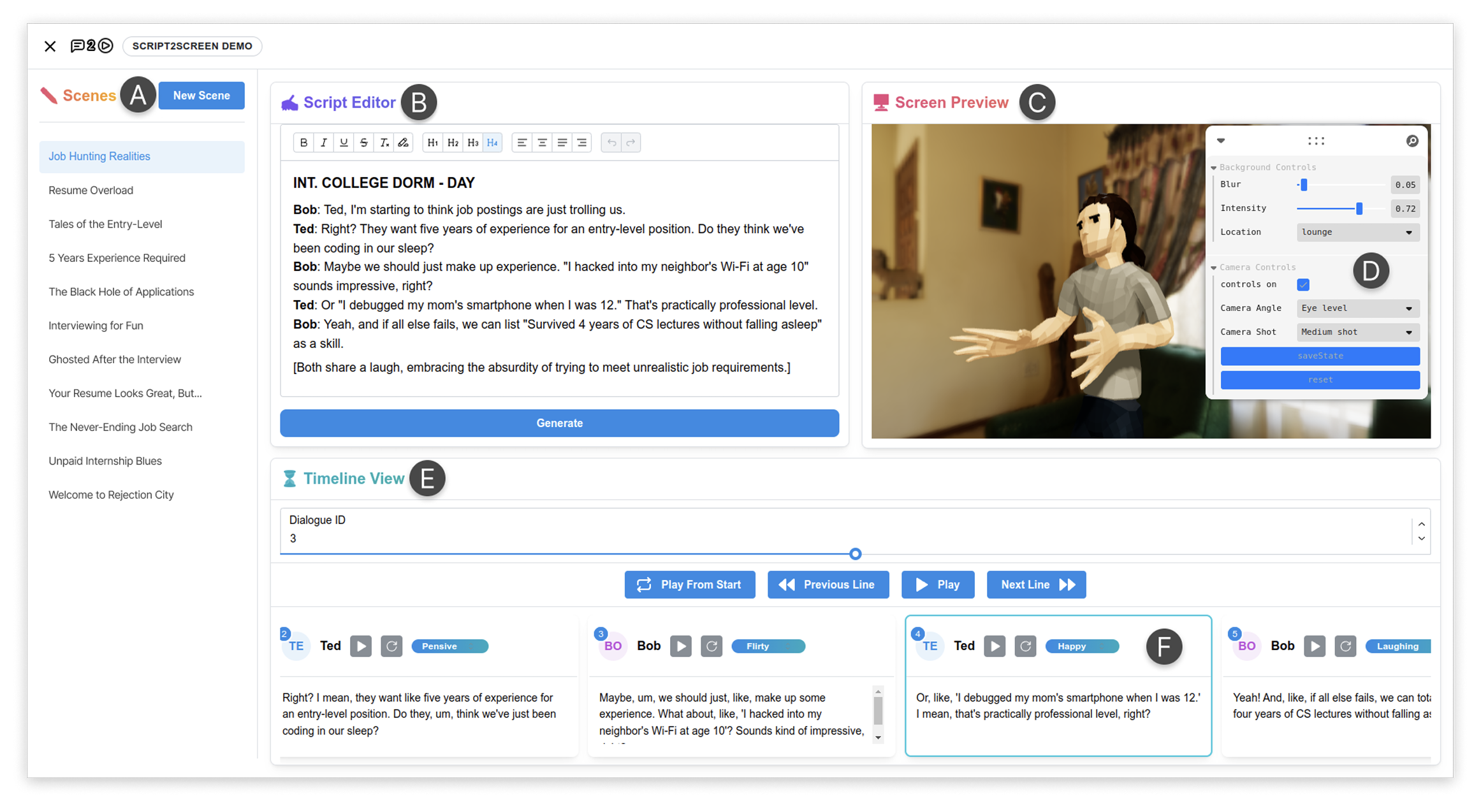

Script2Screen: Supporting Dialogue Scriptwriting with Interactive Audiovisual Generation

Zhecheng Wang, Jiaju Ma, Eitan Grinspun, Tovi Grossman, Bryan Wang

CHI 2025

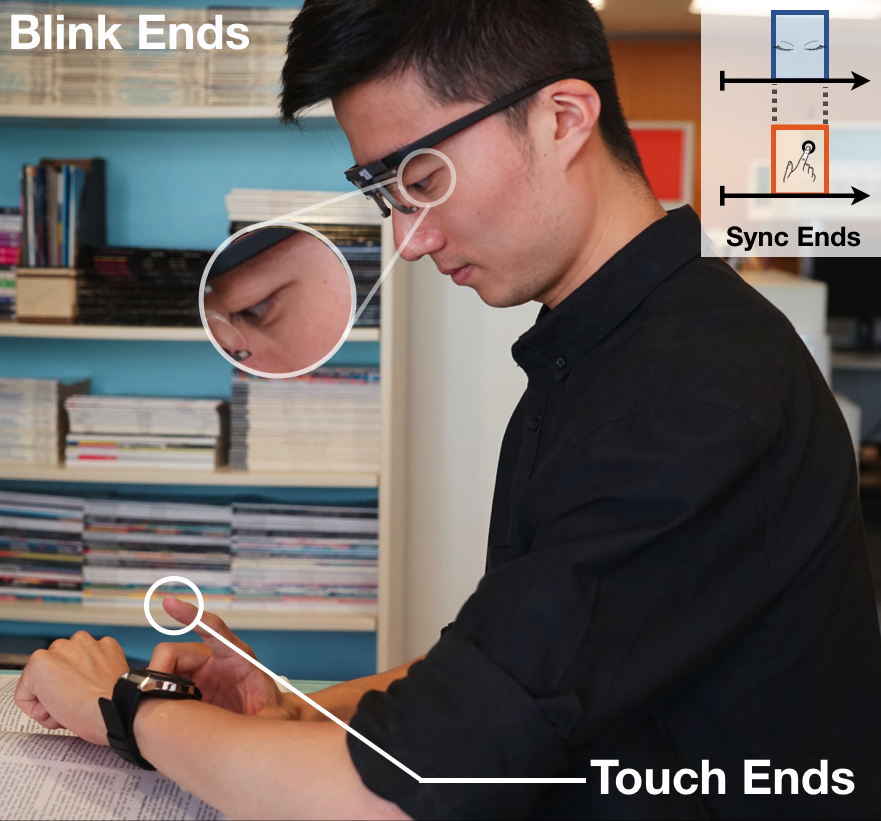

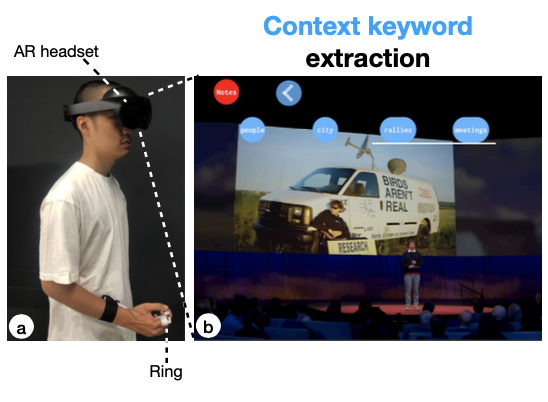

GazeNoter: Co-Piloted AR Note-Taking via Gaze Selection of LLM Suggestions to Match Users' Intentions

Hsin-Ruey Tsai, Shih-Kang Chiu, Bryan Wang

arXiv 2025

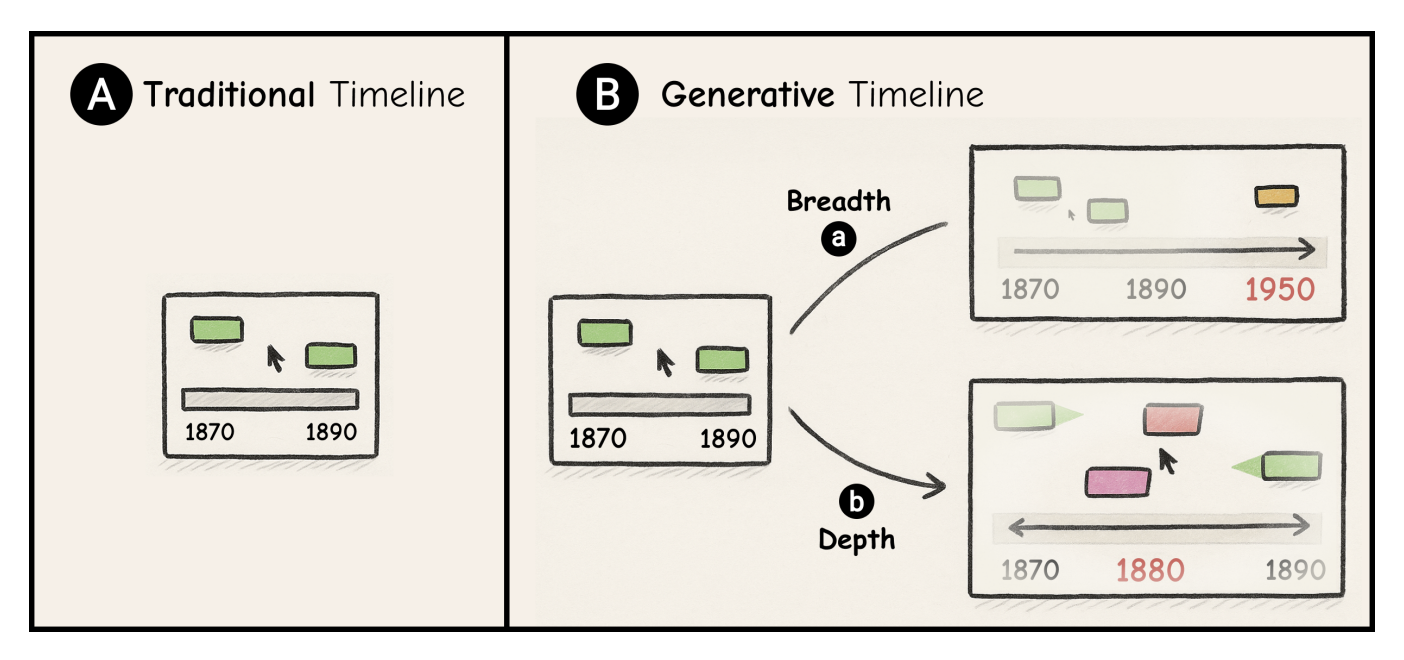

KnowledgeTrail: Generative Timeline for Exploration and Sensemaking of Historical Events and Knowledge Formation

Sangho Suh, Rahul Hingorani, Bryan Wang, Tovi Grossman

Thesis 2025

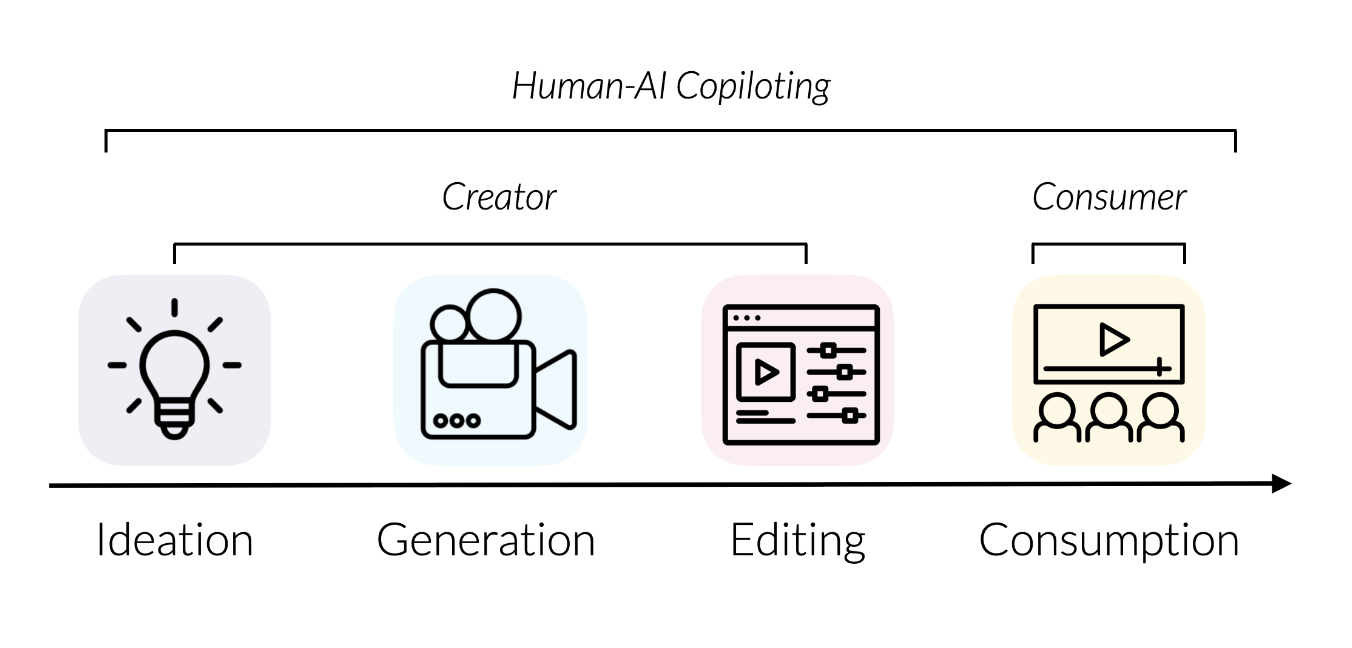

Human-AI Systems for Creating, Consuming, and Interacting with Digital Content

Bryan Wang

arXiv 2024

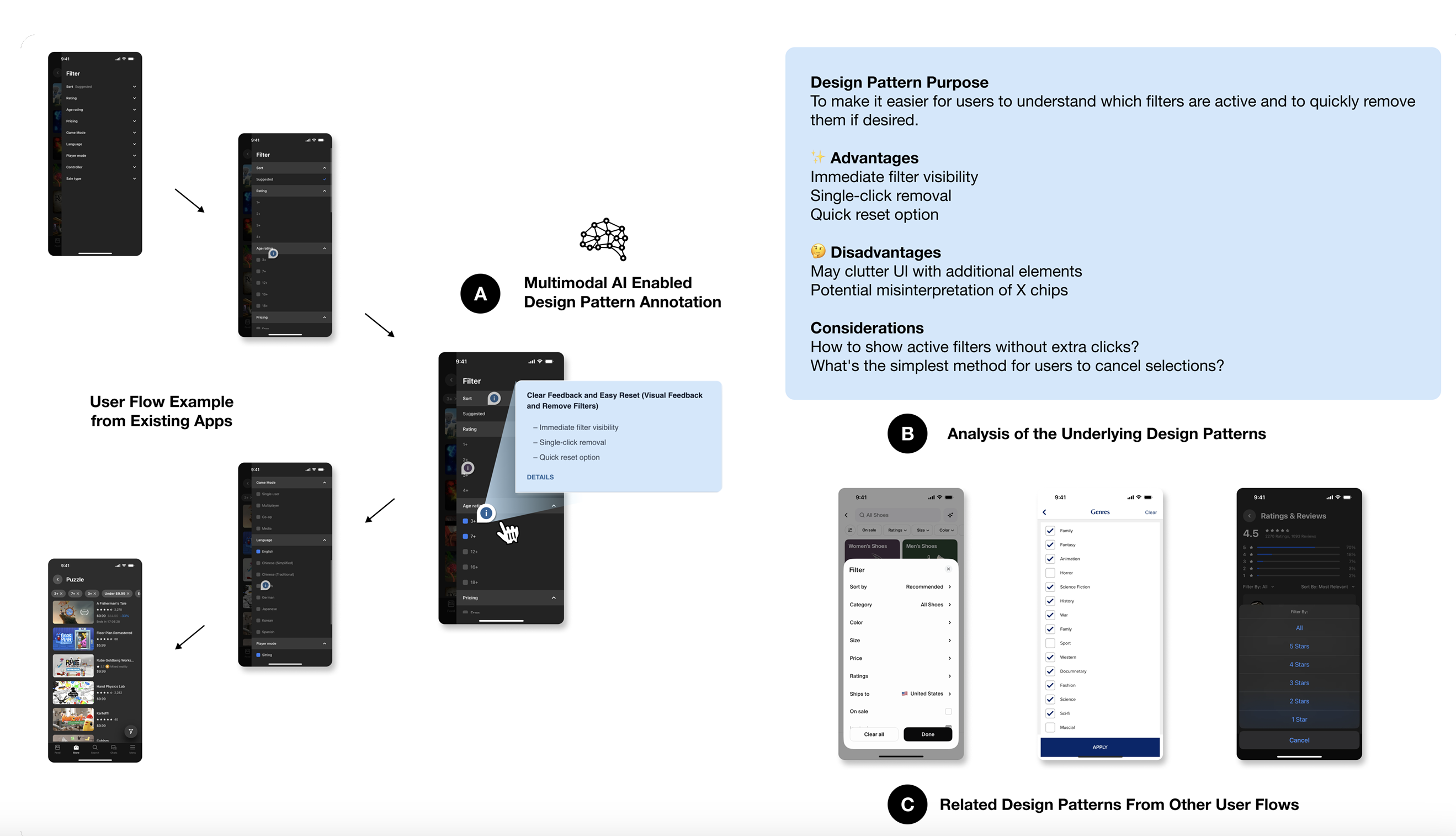

Flowy: Supporting UX Design Decisions Through AI-Driven Pattern Annotation in Multi-Screen User Flows

Yuwen Lu, Ziang Tong, Qinyi Zhao, Yewon Oh, Bryan Wang, Toby Jia-Jun Li

EMNLP 2023

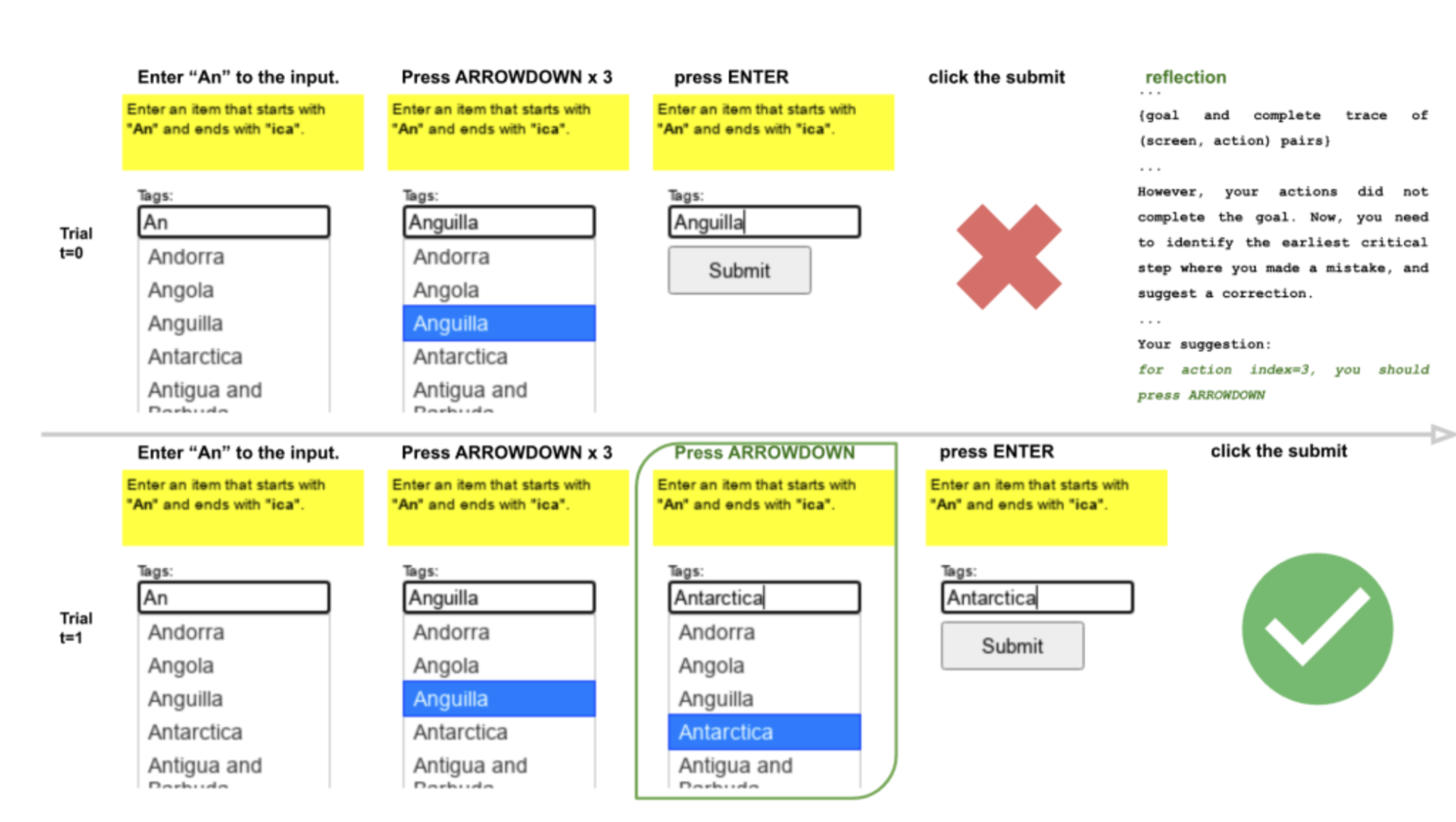

A Zero-Shot Language Agent for Computer Control with Structured Reflection

Tao Li, Gang Li, Zhiwei Deng, Bryan Wang, Yang Li

UIST 2023

Democratizing Content Creation and Consumption through Human-AI Copilot Systems

Bryan Wang

Experience

Research Intern — Meta Reality Labs - Research

Host(s): Raj Sodhi

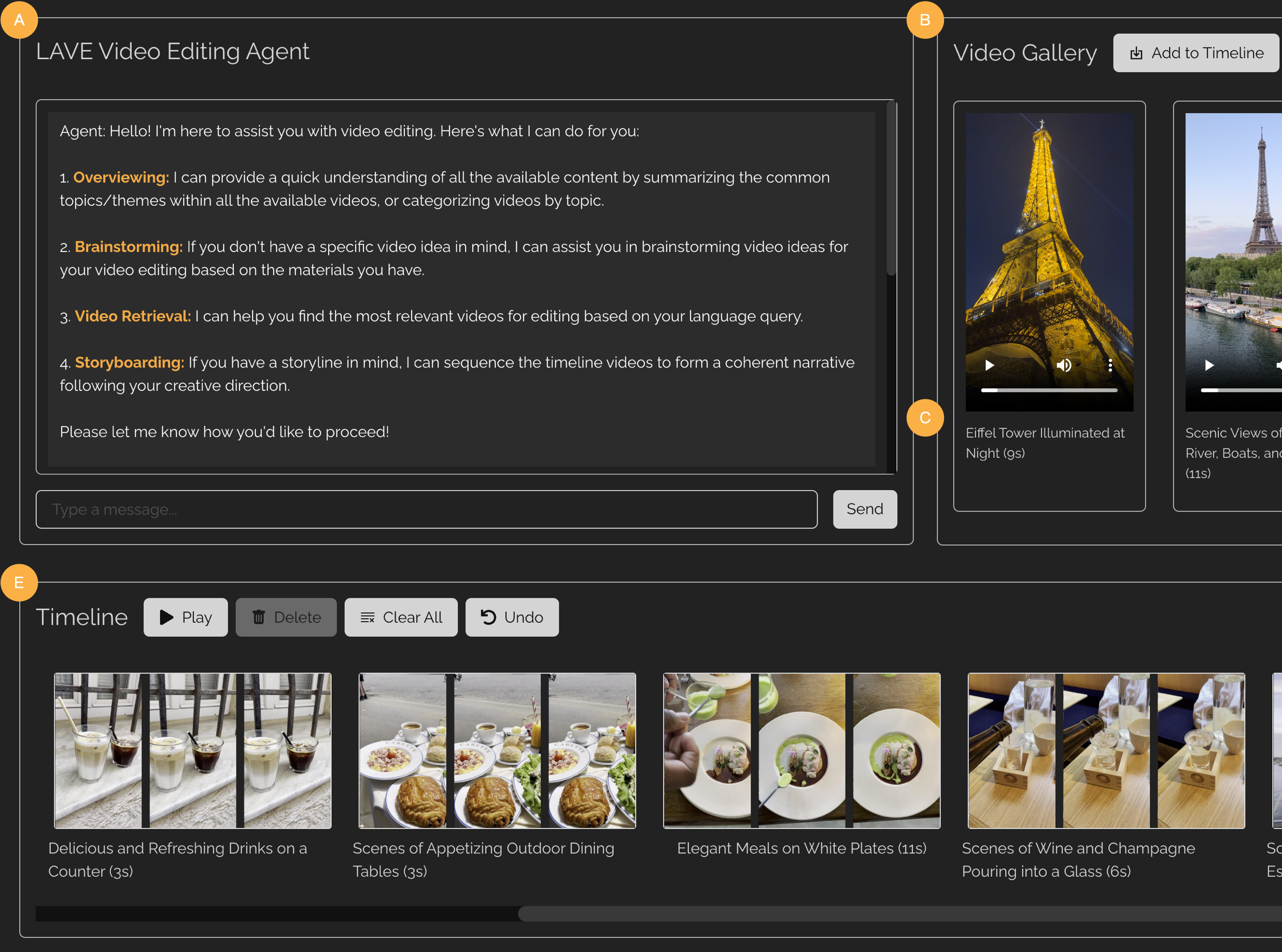

Developed LAVE, a video editing tool with LLM-powered agent assistance. Published at IUI 2024.

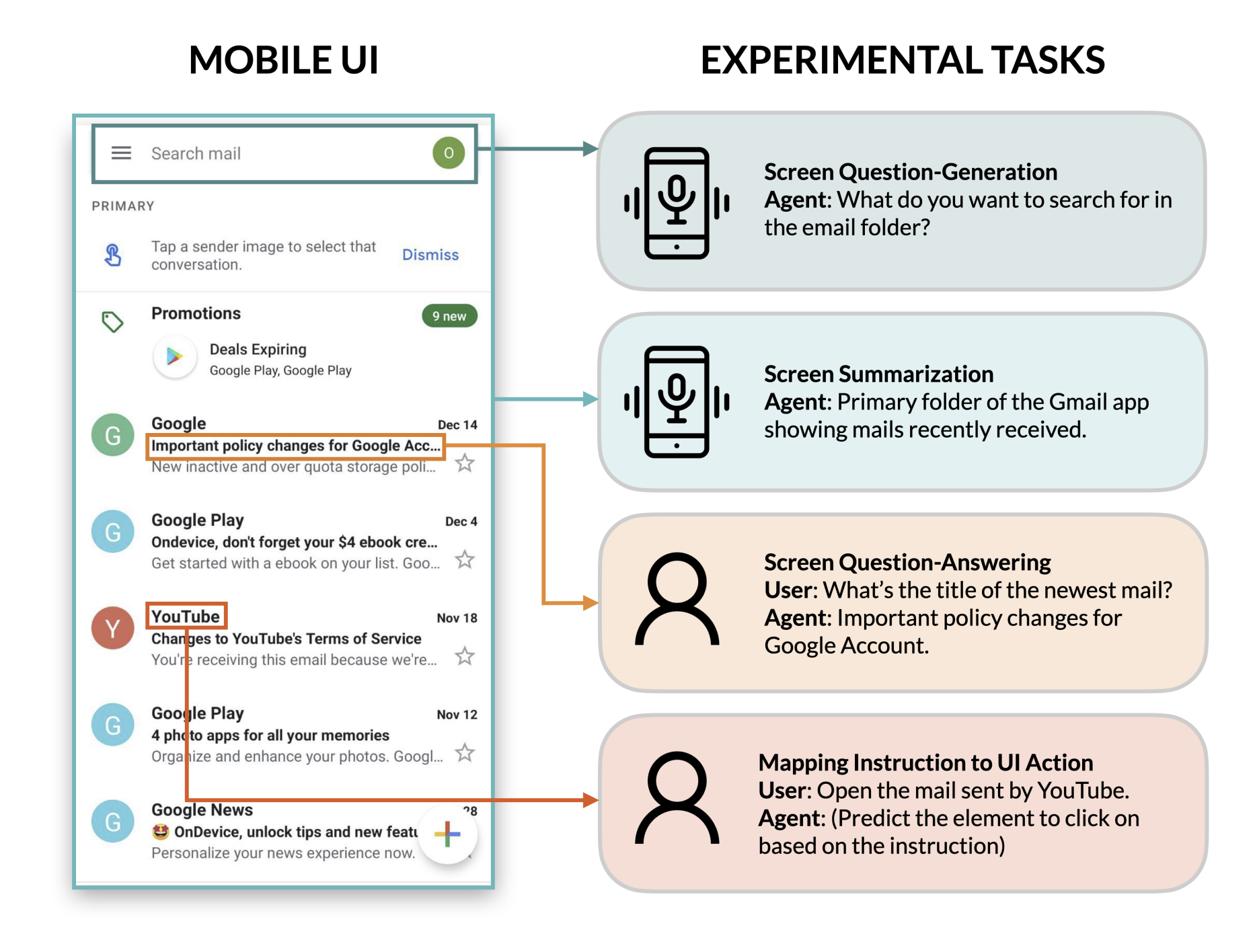

Student Researcher — Google Research

Host(s): Yang Li and Gang Li

Designed prompting techniques for conversational mobile UI interaction using LLMs. Published at CHI 2023.

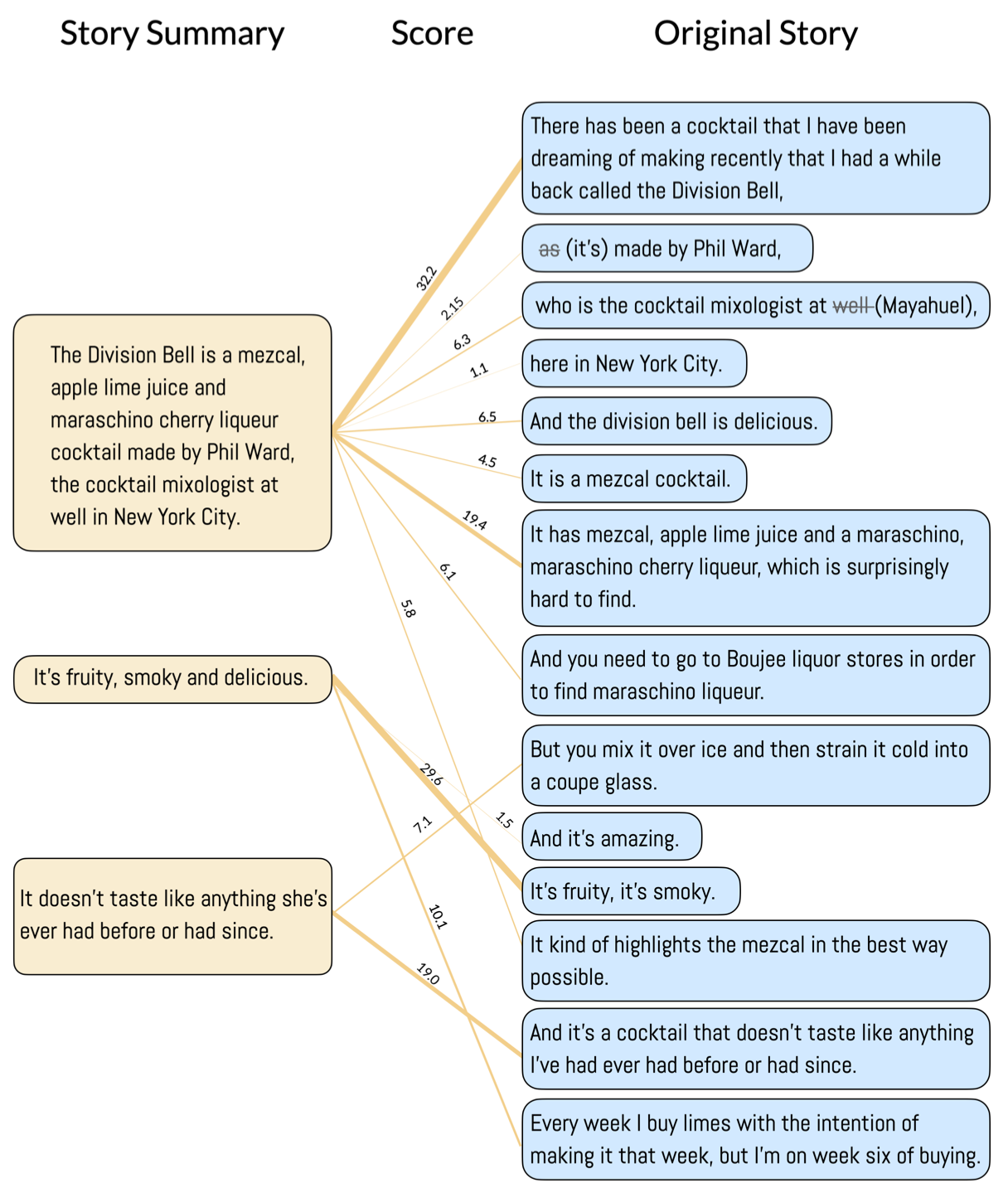

Research Intern — Adobe Research

Host(s): Gautham J. Mysore and Zeyu Jin

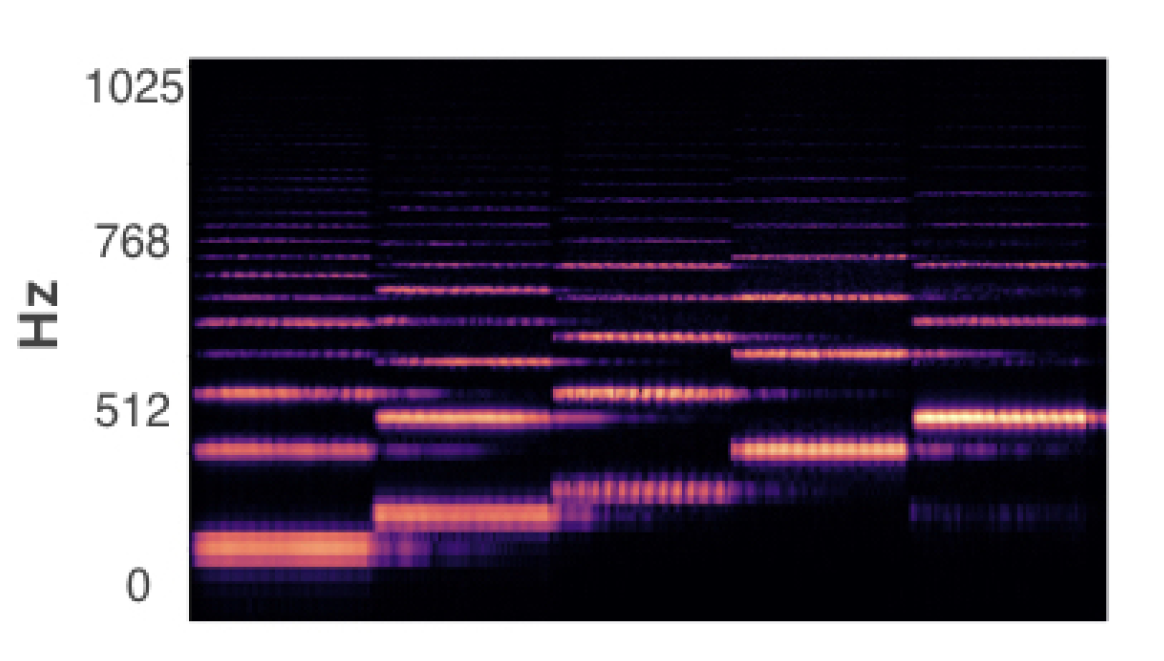

Developed ROPE, an audio story editing tool for automatically shortening voice recordings using NLP. Published at UIST 2022.

Research Intern — Google Research

Host(s): Yang Li and Gang Li

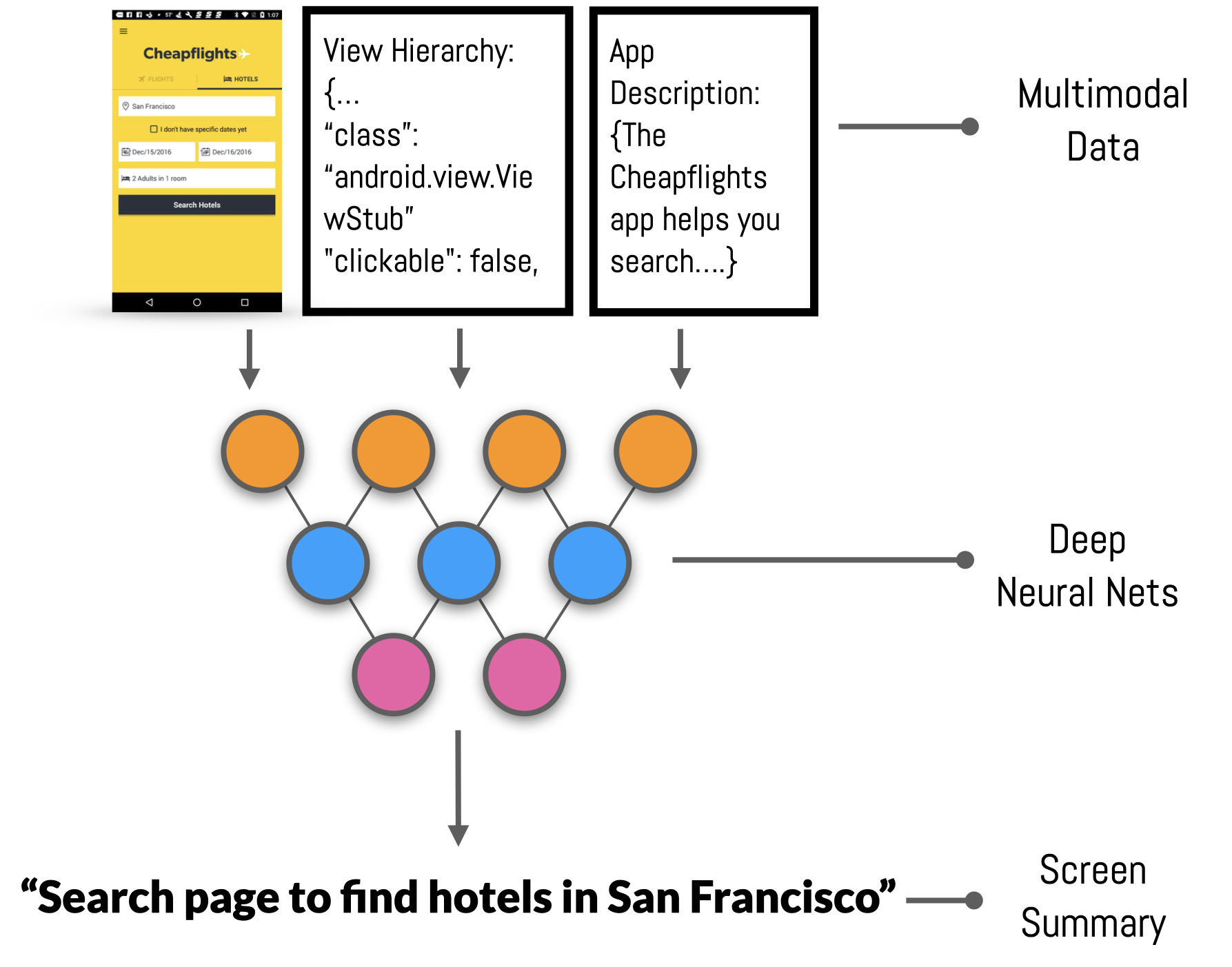

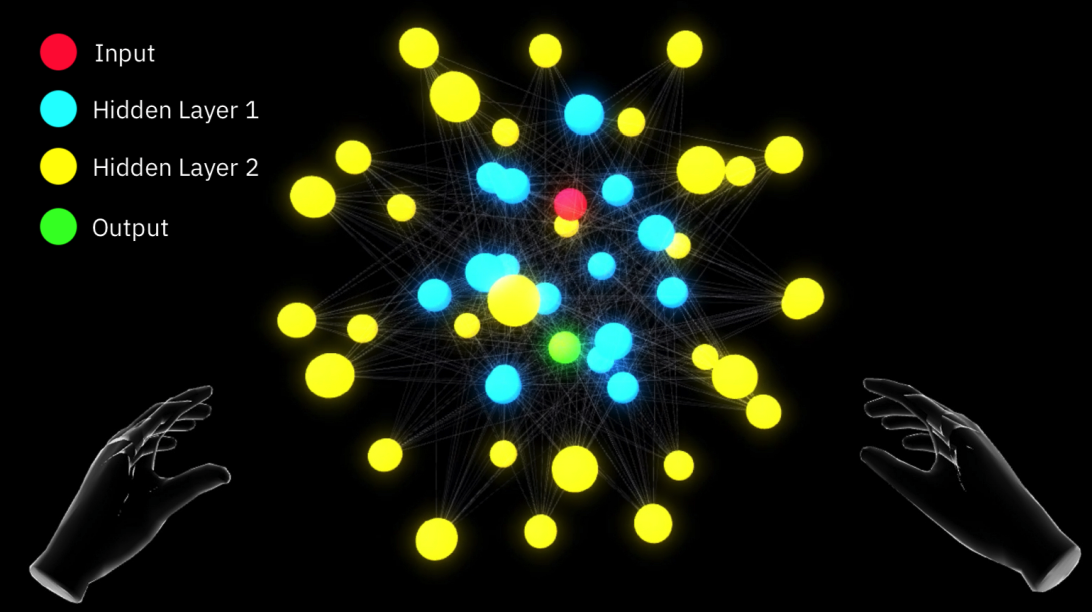

Created a large-scale UI-language dataset (110k+ summaries, 22k+ UIs) and trained multimodal networks for screen summarization. Published at UIST 2021.

Press

News

Feb. 2026