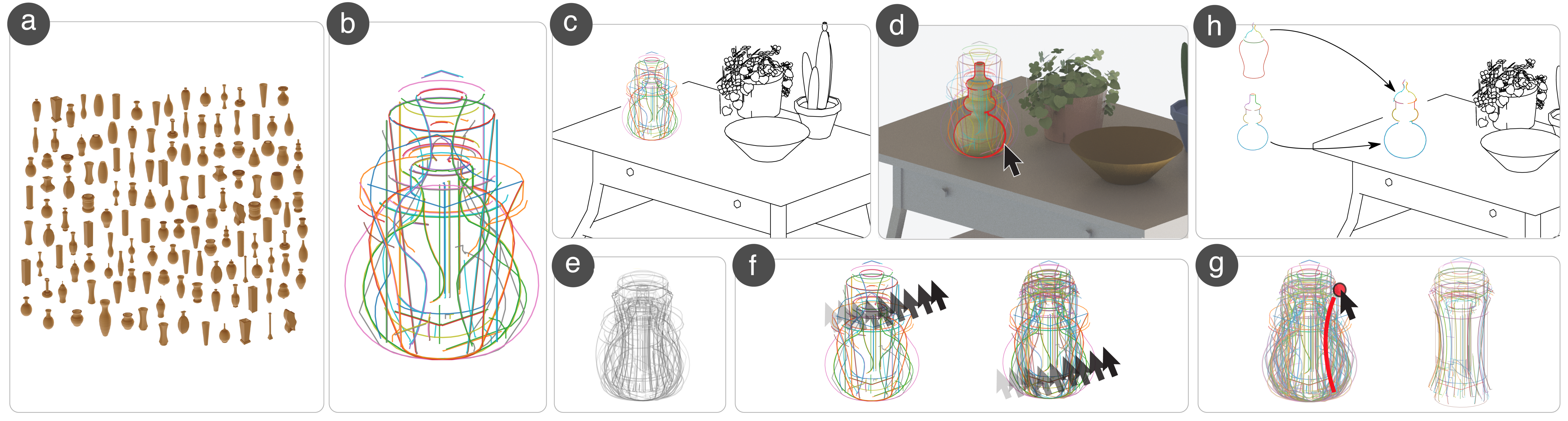

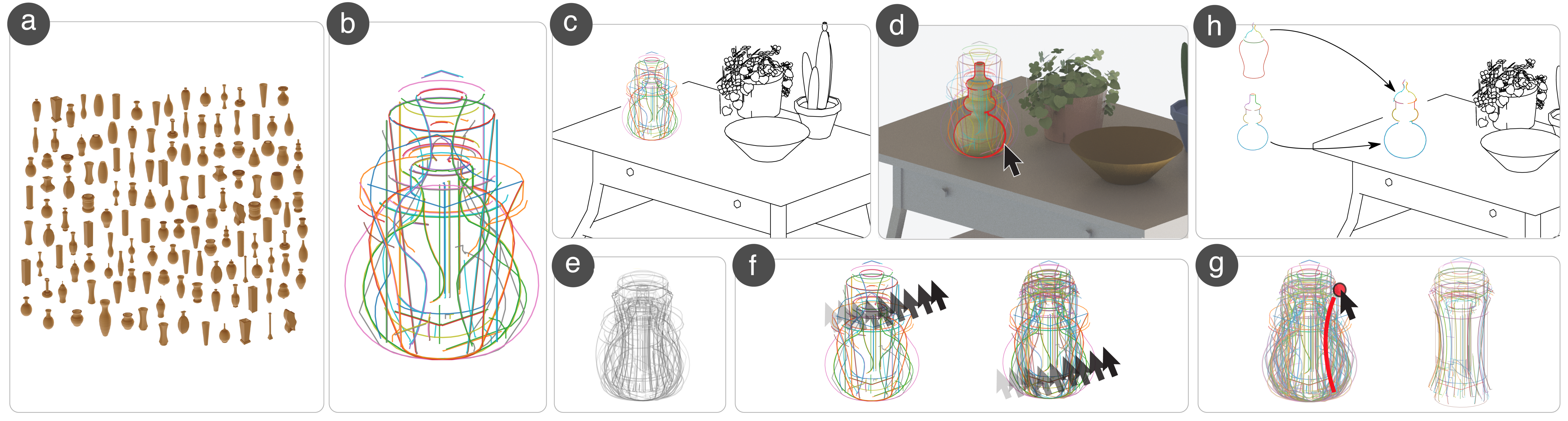

We present juxtaform, a novel approach to the interactive summarization of large shape collections for conceptual shape design. We conduct a formative study to ascertain design goals for creative shape exploration tools. Motivated by a mathematical formulation of these design goals, juxtaform integrates the exploration, analysis, selection, and refinement of large shape collections to support an interactive divergence-convergence shape design workflow. We exploit sparse, segmented sketch-stroke visual abstractions of shape and a novel visual summarization algorithm to balance the needs of shape understanding, in-situ shape juxtaposition, and visual clutter. Our evaluation is threefold: we show that, unlike our proposed shape corpus summarization algorithm, existing shape and stroke clustering algorithms do not address our design goals; we compare juxtaform against a structured image gallery interface on various shape design and analysis tasks; and we present multiple compelling 2D/3D applications of juxtaform.

Our research is funded in part by NSERC. We would also like to thank Disha Tripathy for early discussions, feedback, and help with figures, Zhecheng Wang and Selena Ling for their help with the video submission and figures, the participants of our user studies for their time and input, and the anonymous reviewers for their valuable feedback.