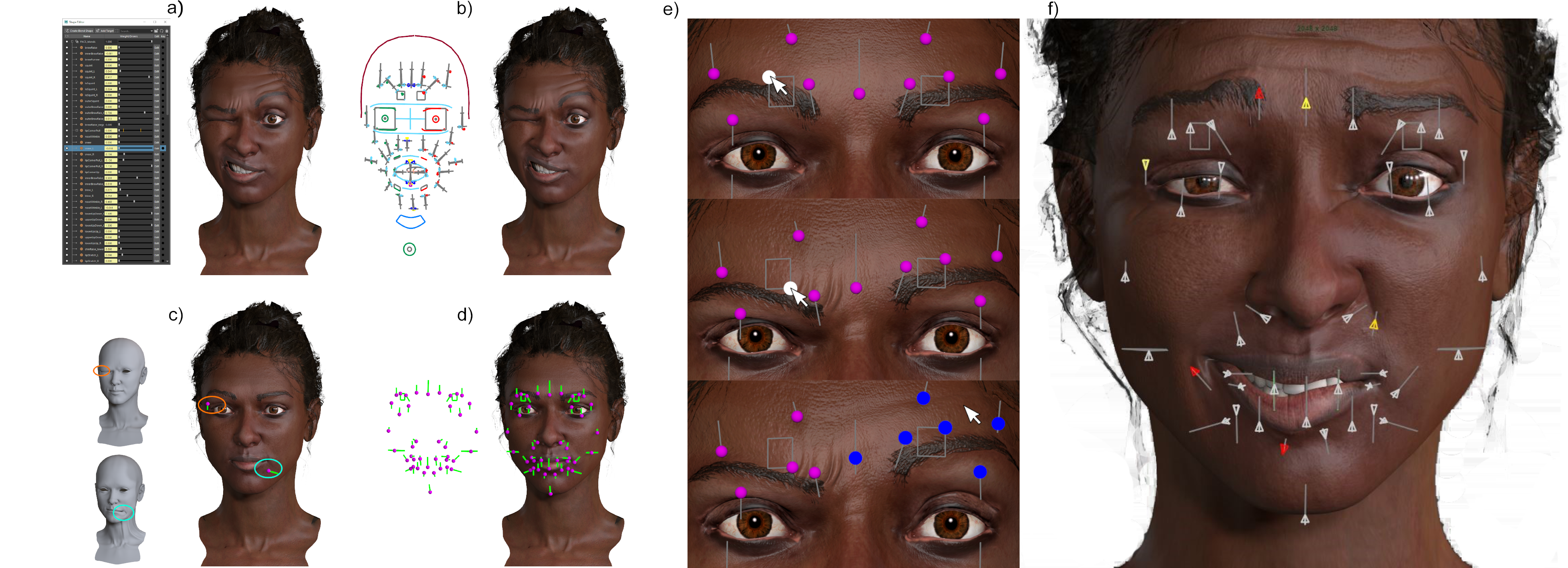

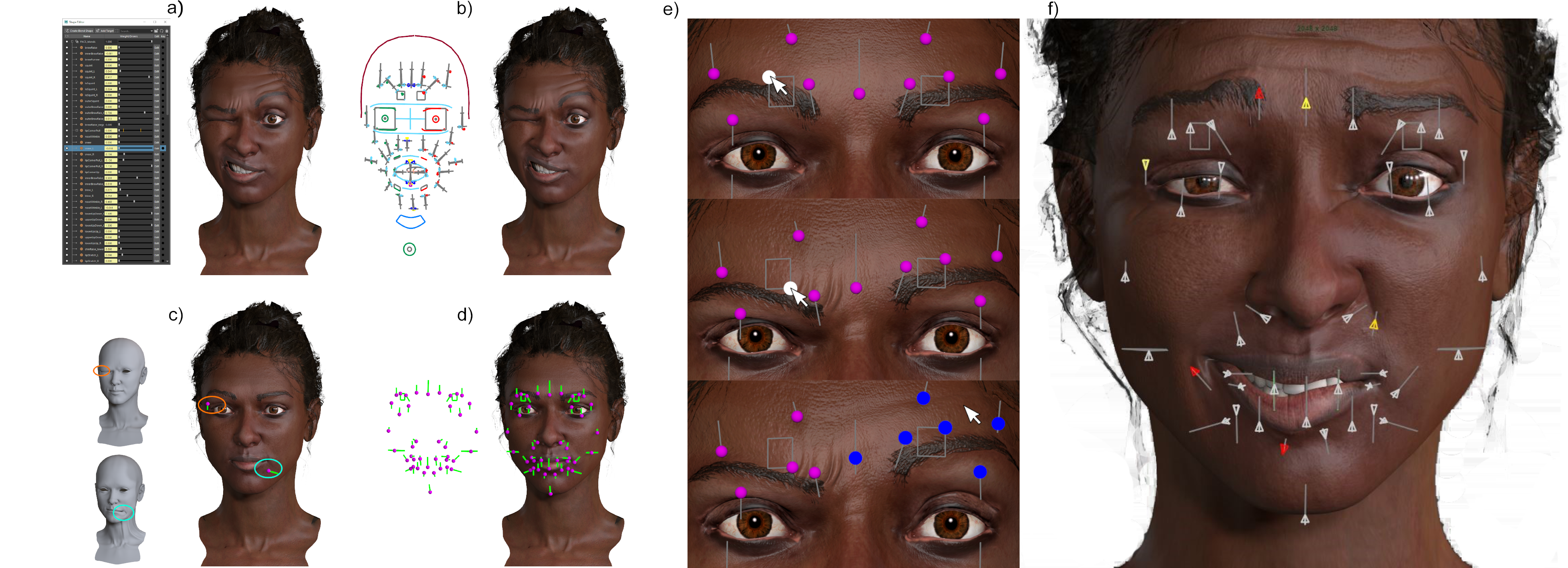

Complex deformable face rigs have many independent parameters that control the shape of the object. A human face has upwards of 50 parameters (FACS Action Units), making conventional UI controls hard to find and operate. Animators address this problem by tediously hand-crafting in-situ layouts of UI controls that serve as visual deformation proxies and facilitate rapid shape exploration. We propose the automatic creation of such in-situ UI control layouts. We distill the design choices made by animators into mathematical objectives, which we optimize by solving an integer quadratic programming problem. Our evaluation is three-fold: we show the impact of our design principles on the resulting layouts; we show automated UI layouts for complex and diverse face rigs, comparable to animator hand-crafted layouts; and we conduct a user study showing our UI layout to be an effective approach to face-rig manipulation, preferable to a baseline slider interface.

Animation by Chris Landreth

@article{Kim:FaceRigUI:2021,

title = {Optimizing UI Layouts for Deformable Face-Rig Manipulation},

author = {Joonho Kim and Karan Singh},

url = {https://doi.org/10.1145/3450626.3459842},

doi = {10.1145/3450626.3459842},

journal = {ACM Transactions on Graphics},

year = {2021},

}

We would like to thank Chris Landreth (JALI Research) and Steve Cullingford (Weta Digital) for providing us with facial rigs, animations, and invaluable feedback. We are also grateful to our user study participants, and to Metahuman (Epic Games), CGTarian, Antony Ward (3D World), CITE LAB, Eisko, Mery Project, and Chad Vernon for face rigs. This research was supported by NSERC.