Using Facial Motion Capture to Drive

an Animated Character

This is an research project that started as a course project for a Character Animation course (CSC 2529) taught by Karan Singh.

The animation industry is relying more and more on motion capture data to drive character animation. Although in practice this has worked well for articulated motion (for example, the human skeleton), there are inherent problems in capturing motion on the human face. Facial motion capture systems can accurately track a large number of points on the face, but they fail to capture skin creases which are crucial for the communication of facial expression. The facial configuration is therefore interpolated from the motion capture data, often resulting in a "smoothed-out" face.

On the other hand, facial models created for hand animation usually have the skin creases built directly into the parameterization of the model. As the parameter values change, deformations are applied to the face model, generating skin creases depending on the desired facial configuration.

With this project, we attempt to capture the best of both of these worlds. Our approach is to use a complex facial rig and previously animated dialog (both from the short film Ryan) in conjunction with motion capture data to train a learning system to generate the parameter values for the rig from the data. This system can then provide optimal animation parameters for new motion data, providing all the facial detail contained in the rig. This approach avoids interpolating the shape of the facial surface to the motion capture data, thus avoiding smooth surface problems.

Update: The inspiration for this project, at the time (Jan. 2005), came from the movies The Polar Express and The Lord of the Rings trilogy. In the latter, the character Gollum was motion-captured, but his face was animated solely by hand. In the former case, all of the characters where played by Tom Hanks, and therefore many of them all look like him. It was my goal to break this captured-subject look. You can read more about these issues here.

Our approach is to use machine learning techniques to learn a mapping between the motion capture data and the rig parameters of the character. Previous approaches have used radial basis function (RBF) interpolation to map the surface describing the character to the configuration established by motion capture markers. See, for example, Frédéric Pighin's work in this repository or the Facial Animation and Modeling Group at the Max Plank Institute.

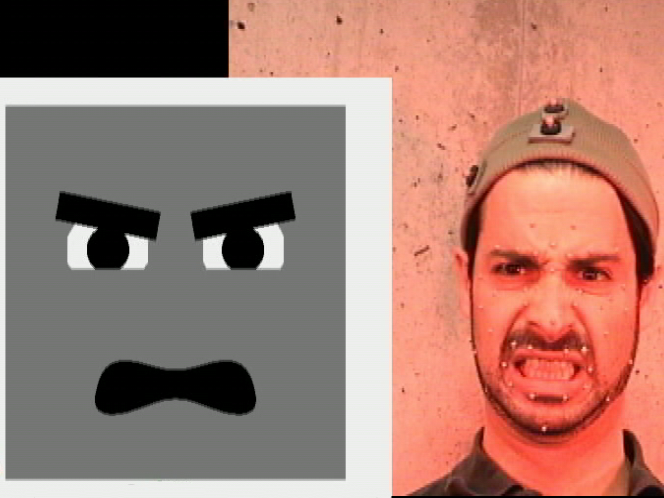

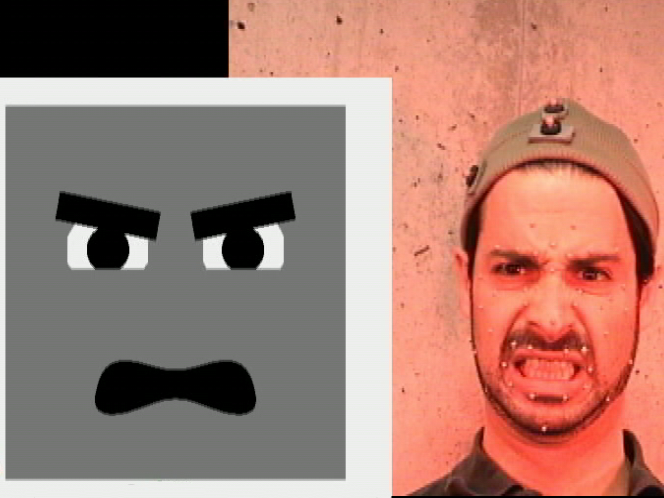

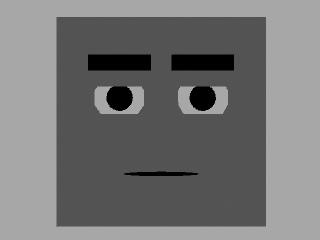

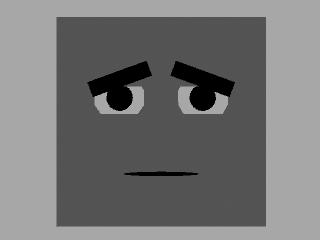

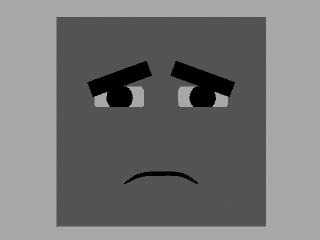

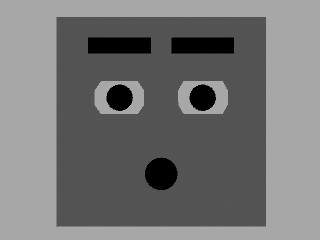

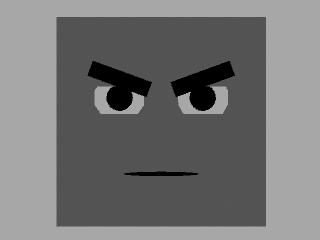

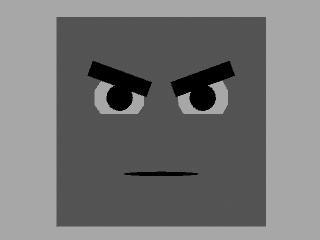

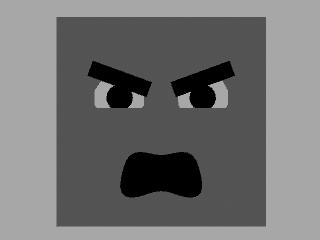

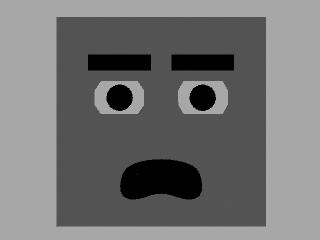

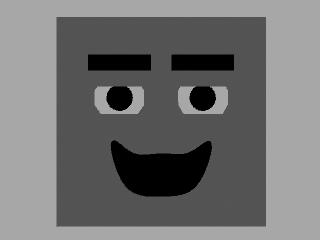

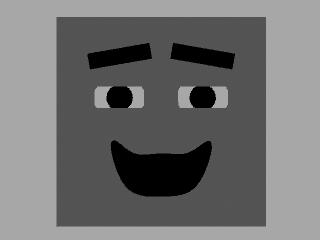

To create a training database of data, we recorded an actor (yours truly) making some interesting facial expressions, attempting to cover all the expressive muscle groups in the face. We simultaneously recorded facial motion capture data from the actor's face and a frontal video shot. The video was then used to animate Boxy, a box-like character presented in this book. He is a very basic character, but capable of a wide range of expression, as shown below.

Technical details: We recorded digital video (DV) at 30 fps. The motion capture data was recorded at 120Hz using 5 Vicon cameras. Forty 3mm markers were placed on the actor, and 3 additional markers were worn on the hat to capture rigid motions of the head. The Vicon cameras record data in the visible, red spectrum, which is why the actor looks red in the videos and images.

To create the animation, I used Adobe Premiere to visualize the video frame by frame and place keyframes on the appropriate controls in Autodesk Maya (Alias Maya at the time).

Neutral |

||

Sad |

Sadder |

Surprised |

Angry |

Angrier |

Angriest |

Afraid |

Happy |

Happier |

The training data video can be found here (5.6MB).

What we are really looking for here is a function that maps the from the motion capture data to the character rig parameters. Let m be the vector of motion capture locations, which has dimension 120 = 40 markers x 3 spatial dimensions, and let p be the vector of rig parameters (10 for Boxy). Then we are looking for a function F that satisfies

p = F(m).

Now, our intuition tells us that when the actor's eyebrow moves up, so should the eyebrow of the character. They should both move in about the same proportion along the face, which leads us to assume a linear function will work well. Thus, we make F a matrix rather than a general function.

There are two issues with this formulation. The first is the high dimensionality of the input compared to the output. Luckily, the motion of the markers on the face is highly correlated, so we can use a dimensionality reduction technique such as principal component analysis (PCA) to eliminate some of the noise.

And speaking of noise brings us to our second issue. When we consider how we want to generate output for our character, we must consider the quality of the input. Now, if a character is animated, we know the animation curves will be smooth. However, motion capture data is typically very noisy, and this is very obvious from one frame to the next. Therefore, if we provide noisy inputs to our linear function, we will obtain noisy outputs in return. We could filter the input data to smooth it out, but we might remove some high frequency components which are important for the motion.

Instead, we are going to change our model to model the error and the time dependence of the data. The error is taken care of by assuming a generative model--that is, a model which generates the inputs from the outputs, modified by some noise ε.

m = Fp + ε

Next, we assume that the value of the rig parameters also evolve linearly, but have some noise corrupting them as well.

pt+1 = Apt + μ

We have thus arrived at the well known linear dynamical system model.

pt+1 = Apt + μ

mt = Fpt + ε

Using the training data, we can compute values for the matrices A and F. Then, when we have new motion capture data, we can generate the rig parameters using a Kalman filter, which computes the optimal values of p.

The following videos are all generating data from this motion capture session. A funny thing happened when we recorded it. The camera for some reason jumped into demo mode, so some of the special video effects available in the camera are displayed here. The last half of the video is fine.

Here are some of the results I generated using the naive approach. I used 3 different functions for F(p): k-nearest neighbor interpolation, polynomial function, and a neural network. The resulting, low quality motion is shown below.

| k-NN | Polynomial | Neural net |

The final animation using the improved, linear dynamical model is here.

Note how this video is much less noisy than the previous ones. In fact, Boxy's movements strike me as somewhat too smooth. Additionally, there are still some problems with the mouth closing in over itself and the eyes never fully close.

Looking back on this project with 20/20 vision given by the work in this area done since, I how some of the problems I ran into could be solved somewhat differently. One of the biggest issues was getting good animation data. Boxy is a very simple character, and we were hoping for something more complex. However, asking an animator to animate to match a video is not only labor intensive and time consuming but also very boring. Therefore, an approach which only requires matching a small number of poses would be more useful. One such system was built by Erika Chuang (link).

I also think that the linear dynamics model of the face is somewhat limited. When a person changes expression, the forces on the skin of the face create new dynamics. This could be simulated better with a larger number of linear dynamical systems--in a sense, as a piecewise linear dynamics. Unfortunately, I never implemented this kind of model.

Finally, it is important to note that using a least squares error for this type of application is the best approach. In my experiments, I was able to use a Gaussian process to reduce the testing error for a generative regression model quite a bit. However, the motion in this case was very damped. Using a linear/polynomial model for generative regression gave higher testing errors, yet the motion of the character followed the actor's motion better due to the linear relationship. Therefore, a better energy/error term would have produced better results.