Exploratory Font Selection Using Crowdsourced Attributes

Peter O'Donovan¹*, Jānis Lībeks¹*, Aseem Agarwala², Aaron Hertzmann¹,²

¹University of Toronto, ²Adobe

*Both authors contributed equally


Try the Interfaces:

Video:

Abstract

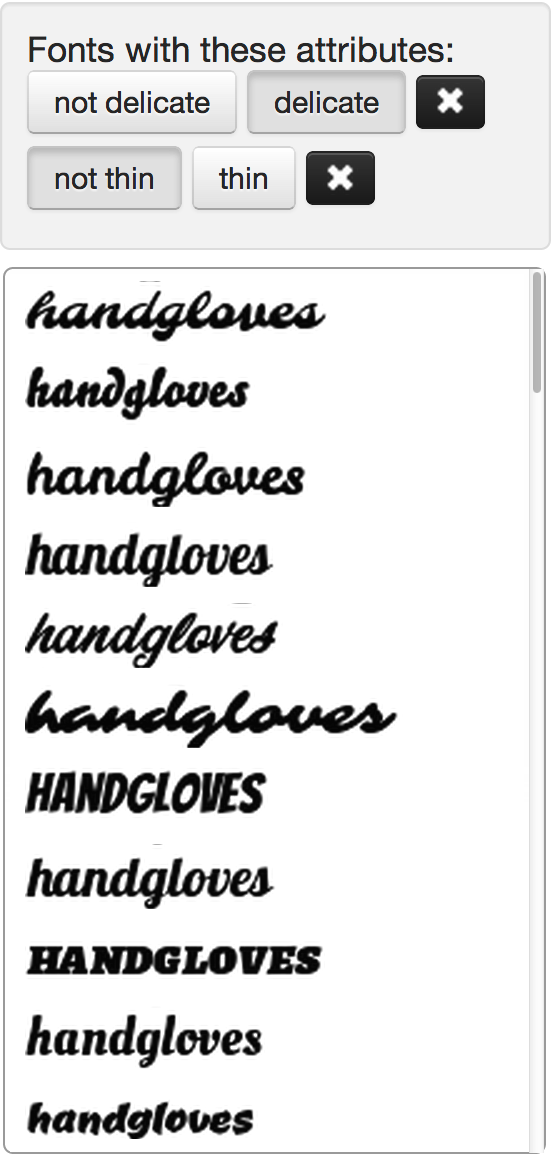

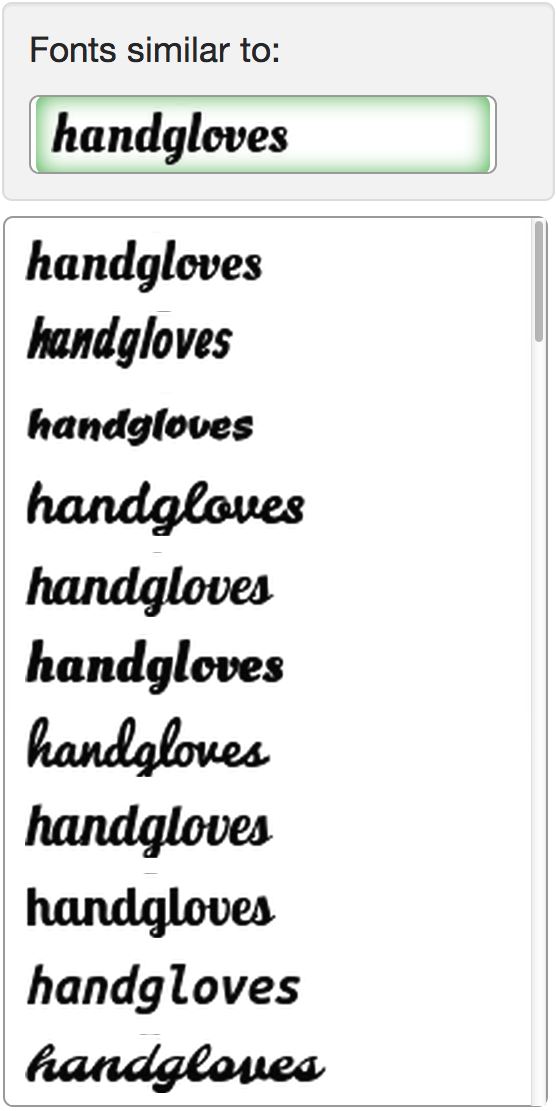

This paper presents interfaces for exploring large collections of fonts for design tasks. Existing interfaces typically list fonts in a long, alphabetically-sorted menu that can be challenging and frustrating to explore. We instead propose three interfaces for font selection. First, we organize fonts using high-level descriptive attributes, such as "dramatic" or "legible." Second, we organize fonts in a tree-based hierarchical menu based on perceptual similarity. Third, we display fonts that are most similar to a user's currently-selected font. These tools are complementary; a user may search for "graceful" fonts, select a reasonable one, and then refine the results from a list of fonts similar to the selection. To enable these tools, we use crowdsourcing to gather font attribute data, and then train models to predict attribute values for new fonts. We use attributes to help learn a font similarity metric using crowdsourced comparisons. We evaluate the interfaces against a conventional list interface and find that our interfaces are preferred to the baseline. Our interfaces also produce better results in two real-world tasks: finding the nearest match to a target font, and font selection for graphic designs.
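As a rough illustration of how the attribute search and the similar-font list could work together, here is a minimal Python sketch. It is not the authors' code: the font names and attribute scores are invented placeholders, the helper names `rank_by_attribute` and `most_similar` are hypothetical, and a plain weighted Euclidean distance over attribute vectors stands in for the similarity metric that the paper learns from crowdsourced comparisons.

```python
import math

# Hypothetical fonts with invented attribute scores in [0, 1].
# In the paper, such values come from crowdsourced data and trained models.
FONTS = {
    "script_a": {"dramatic": 0.7, "legible": 0.3, "graceful": 0.9},
    "display_b": {"dramatic": 0.9, "legible": 0.4, "graceful": 0.6},
    "sans_c": {"dramatic": 0.2, "legible": 0.9, "graceful": 0.4},
    "sans_d": {"dramatic": 0.2, "legible": 0.9, "graceful": 0.3},
}


def rank_by_attribute(fonts, attribute):
    """Return font names sorted from highest to lowest score for one attribute."""
    return sorted(fonts, key=lambda name: fonts[name][attribute], reverse=True)


def most_similar(fonts, selected, k=2, weights=None):
    """Return the k fonts closest to `selected`.

    Assumption: a weighted Euclidean distance over attribute vectors,
    used here only as a stand-in for a learned similarity metric.
    """
    attrs = sorted(fonts[selected])
    if weights is None:
        weights = {a: 1.0 for a in attrs}

    def distance(name):
        return math.sqrt(
            sum(
                weights[a] * (fonts[name][a] - fonts[selected][a]) ** 2
                for a in attrs
            )
        )

    others = [name for name in fonts if name != selected]
    return sorted(others, key=distance)[:k]


# Search for "graceful" fonts, pick the top result, then refine by similarity.
graceful = rank_by_attribute(FONTS, "graceful")
similar = most_similar(FONTS, graceful[0], k=2)
print(graceful)
print(similar)
```

The two functions mirror the workflow described in the abstract: an attribute query narrows the collection, and the similarity list refines the choice around a selected font. A learned metric would replace the uniform `weights` with values fitted to the comparison data.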

Paper

Peter O'Donovan, Jānis Lībeks, Aseem Agarwala, Aaron Hertzmann. Exploratory Font Selection Using Crowdsourced Attributes. ACM Transactions on Graphics 33(4) (Proc. SIGGRAPH), 2014.

BibTex

Supplemental Materials

Further Experiments and Study Details: supplemental.pdf

Google WebFonts used in our dataset: gwfonts.zip

These fonts were downloaded from https://www.google.com/fonts

Attribute Dataset: attribute.zip

Similarity Dataset & MATLAB code for metric learning: similarity.zip