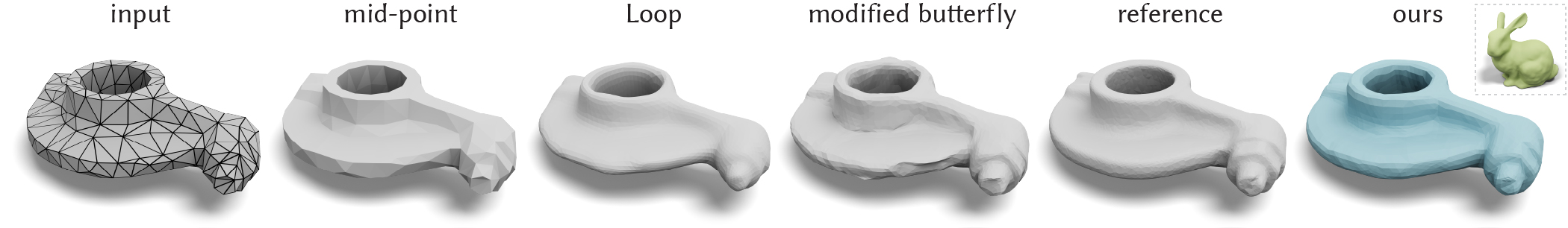

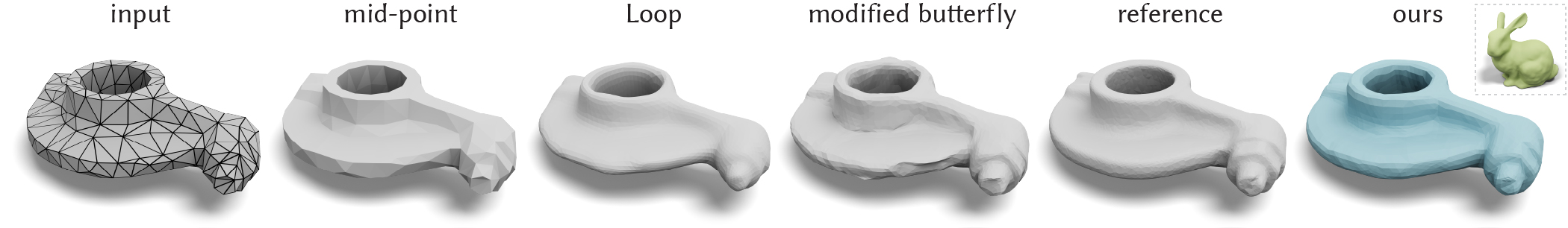

This paper introduces Neural Subdivision, a novel framework for data-driven coarse-to-fine geometry modeling. During inference, our method takes a coarse triangle mesh as input and recursively subdivides it to a finer geometry by applying the fixed topological updates of Loop Subdivision, but predicting vertex positions using a neural network conditioned on the local geometry of a patch. This approach enables us to learn complex non-linear subdivision schemes, beyond simple linear averaging used in classical techniques. One of our key contributions is a novel self-supervised training setup that only requires a set of high-resolution meshes for learning network weights. For any training shape, we stochastically generate diverse low-resolution discretizations of coarse counterparts, while maintaining a bijective mapping that prescribes the exact target position of every new vertex during the subdivision process. This leads to a very efficient and accurate loss function for conditional mesh generation, and enables us to train a method that generalizes across discretizations and favors preserving the manifold structure of the output. During training we optimize for the same set of network weights across all local mesh patches, thus providing an architecture that is not constrained to a specific input mesh, fixed genus, or category. Our network encodes patch geometry in a local frame in a rotation- and translation-invariant manner. Jointly, these design choices enable our method to generalize well, and we demonstrate that even when trained on a single high-resolution mesh our method generates reasonable subdivisions for novel shapes.
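The split between fixed connectivity and predicted positions described above can be illustrated with a minimal sketch. This is not the authors' implementation. The function `subdivide_once`, the `predict` callback, and the `midpoint` placeholder are hypothetical names introduced here. The sketch also simplifies in two ways: the placeholder sees only the two endpoints of an edge, whereas the paper conditions its network on a local patch, and only newly inserted vertices are positioned. The 1-to-4 triangle split itself is the standard topological step of Loop Subdivision.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Face = Tuple[int, int, int]


def midpoint(a: Vec3, b: Vec3) -> Vec3:
    """Placeholder position rule: plain edge midpoint (NOT the learned rule)."""
    return tuple((x + y) / 2.0 for x, y in zip(a, b))


def subdivide_once(
    vertices: Sequence[Vec3],
    faces: Sequence[Face],
    predict: Callable[[Vec3, Vec3], Vec3] = midpoint,
) -> Tuple[List[Vec3], List[Face]]:
    """One level of the 1-to-4 triangle split used by Loop subdivision.

    Connectivity is fixed; only `predict` decides where new vertices go.
    A learned model would be plugged in through `predict` (assumption).
    """
    new_vertices = list(vertices)
    edge_to_new: Dict[Tuple[int, int], int] = {}

    def edge_vertex(i: int, j: int) -> int:
        # Undirected edge key, so the two faces sharing an edge reuse one vertex.
        key = (min(i, j), max(i, j))
        if key not in edge_to_new:
            edge_to_new[key] = len(new_vertices)
            new_vertices.append(predict(vertices[key[0]], vertices[key[1]]))
        return edge_to_new[key]

    new_faces: List[Face] = []
    for a, b, c in faces:
        ab, bc, ca = edge_vertex(a, b), edge_vertex(b, c), edge_vertex(c, a)
        # Three corner triangles plus the central one, oriented like (a, b, c).
        new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
    return new_vertices, new_faces


if __name__ == "__main__":
    tet_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    tet_faces = [(0, 1, 2), (0, 3, 1), (0, 2, 3), (1, 3, 2)]
    v, f = subdivide_once(tet_vertices, tet_faces)
    print(len(v), len(f))  # 10 vertices, 16 faces
```

On a tetrahedron, one call inserts one vertex per edge (6 new vertices) and turns 4 faces into 16. Applying the function recursively gives the coarse-to-fine hierarchy, and swapping `midpoint` for a trained predictor is where a non-linear scheme would enter.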

@article{Liu:Subdivision:2020,
  title = {Neural Subdivision},
  author = {Hsueh-Ti Derek Liu and Vladimir G. Kim and Siddhartha Chaudhuri and Noam Aigerman and Alec Jacobson},
  year = {2020},
  issue_date = {July 2020},
  publisher = {Association for Computing Machinery},
  volume = {39},
  number = {4},
  issn = {0730-0301},
  journal = {ACM Trans. Graph.},
}

Our research is funded in part by New Frontiers of Research Fund (NFRFE–201), the Ontario Early Research Award program, NSERC Discovery (RGPIN2017–05235, RGPAS–2017–507938), the Canada Research Chairs Program, the Fields Centre for Quantitative Analysis and Modelling, and gifts from Adobe Systems, Autodesk and MESH Inc. We thank members of the Dynamic Graphics Project at the University of Toronto; Thomas Davies, Oded Stein, Michael Tao, and Jackson Wang for early discussions; Rahul Arora, Seungbae Bang, Jiannan Li, Abhishek Madan, and Silvia Sellán for experiments and generating test data; Honglin Chen, Eitan Grinspun, and Sarah Kushner for proofreading. We thank Mirela Ben-Chen for the enlightening advice on the experiments and the writing; Yifan Wang for running comparisons; and Ahmad Nasikun for evaluations. We obtained our test models from Thingi10K and we thank all the artists for sharing a rich variety of 3D models. We especially thank John Hancock for the IT support which helped us smoothly conduct this research.