Genetic Triangle Portrait

This was a project that I did for fun! The goal of it was to see if I could take a headshot of myself, and re-generate it using triangles through a genetic algorithm. You can see the results above.

In reality, I didn't actually end up using a pure genetic algorithm to create the above result as I wasn't satisfied with the original output. Therefore, I modified it! Let me walk you through my journey.

Overview

What is a genetic algorithm?

Simply put, a genetic algorithm is one that performs the same task over and over again, trying to get better results each time by improving on its predecessor. Each iteration (or generation) takes the results of the previous iteration to create the next set of results, and to try to improve on the previous cycle.

A genetic algorithm follows these steps:

- Create an initial population

- Evaluate/score each entity in the population to see how well it matches the end goal

- Select parents from the population to create the next generation of children

- Combine the parents in some fashion to generate a new population

- Potentially introduce a mutation

- Repeat from step 2 using the new population

My approach

I decided to write this in Unity, as I'm familiar with its graphics libraries, and I could focus on the details of the algorithm

Initial Population

This part was fairly straight forward. I decided to work with a population size of 100. For the initial population, for each entity, I created a solid background using a random color. I then generated between 2 and 10 triangles picking the x and y values for the vertices at random.

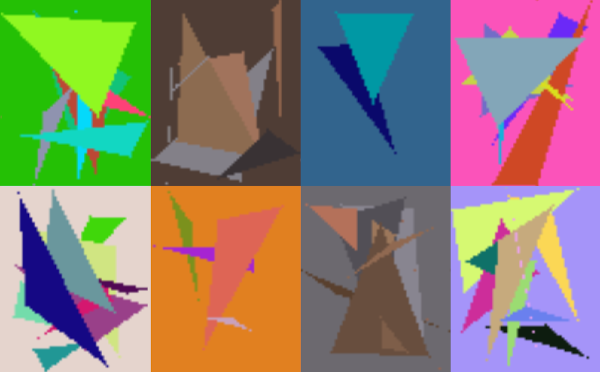

Therefore, I started with entities like these:

Evaluating / Scoring

For the purpose of scoring, I evaluated the image pixel by pixel comparing it to the source image. My first instinct was to simply see how far off each of the red, blue, and green values were; but after doing a quick bit of reading I learned that this wasn't the best way. This is because our eyes don't see red, blue, and green equally. Fortunately, the "International Commission on Illumination" developed an algorithm called DeltaE in the late 1900s that scores the difference more accurately.

Selecting Parents

Selecting parents was done by doing a lottery (or tournament), where out of the 100 entities in the population, 8 were randomly chosen (I decided to select 8 arbitrarily, but that seemed to work as well as other numbers around this value). Of those 8, the 2 that had the highest score were used to create a child for the next generation. This was done 100 times (for the next population of 100).

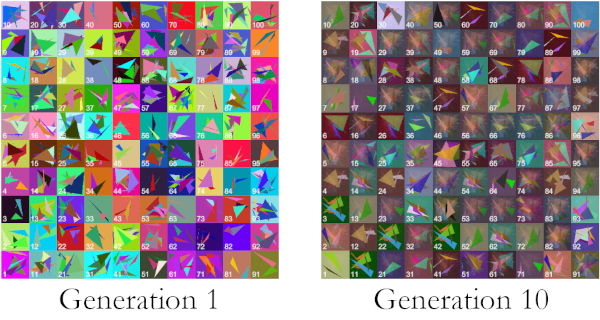

This gives a generation with a sample set like these:

Combining Parents

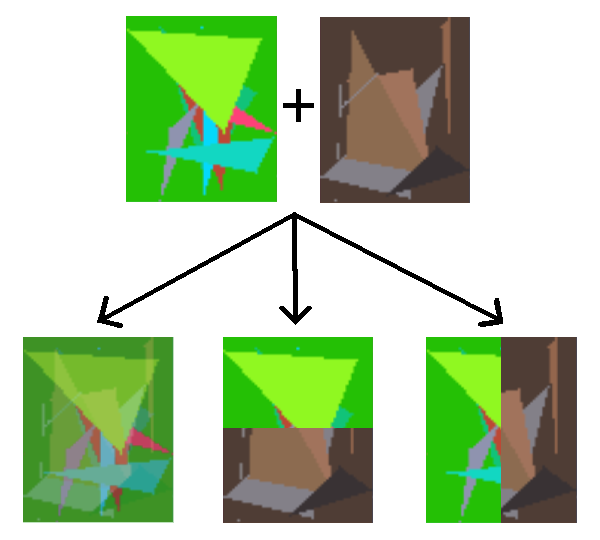

Having selected a pair of entities to act as the "parents", I needed to combine them in some way. I started off by simply changing the alpha value of the two and overlapping the images. I picked a random value (x) between 0% and 100%, applied that to the first image's alpha channel, then applied the inverse (1 - x) to the alpha channel of the second image. This worked fairly well, but had less variation than I would have liked.

After further reading, I also introduced two new ways of combining the images; one was to split the image vertically by taking a random row and using parent 1 for all the rows up to and including this row, then the rest of the rows I took from parent 2. Likewise, I did the same with the columns, splitting the image horizontally, picking a random column and using parent 1 up to and including that column, then parent 2 for the rest of the rows. This seemed to work fairly well.

Here is an example of the possibilities

Mutating

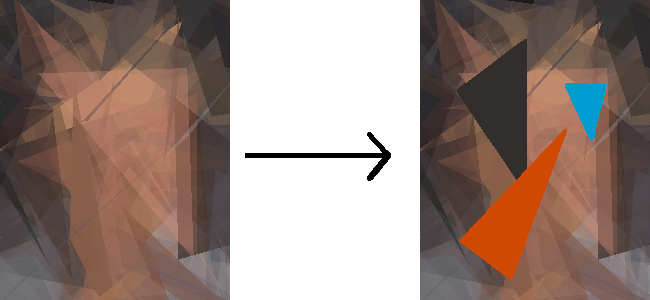

Mutations were fairly simple, they happened 20% of the time (another number chosen empirically through trial and error). I simply took the created child, and if it was to be mutated, introduced between 1 and 3 new random triangles to the image. This was then available for the next generation of images.

It would be something like this:

So, now that that's all out of the way, let's look at what actually happened...

My first attempt

So, fundamentally it's not very difficult; however, it took me a few attempts to get what I was looking for. The first attempt provided me with this...

I started with this:

But I ended up with this:

If you squint your eyes...you can kind of see a head shaped apparition in the middle, but I think that was more due to the fact that all the vertices were inside the frame, so there was a bias towards the middle.

Back to the drawing board...

After a few more attempts, finding and removing several bugs, and refining what the process was doing, it started to look a bit better.

This is the result on the 5th attempt after 5000 generations:

Better, but still room for improvement...

I was still missing the detail I wanted, but you can actually see it coming together.

Changing the algorithm...

At this point, I was starting to get what I wanted, but noticed that the images would very quickly converge into an approximation of the end goal, and then it would change very little thereafter.

Thinking about the problem, I realized several things:

- After the images had converged, the system was simply waiting for a mutation that improved the score

- That colors that were outside of the color palette of the image were simply discarded (a wasted generation)

- It was possible to start getting detail in an area, but then lose the detail due to a large triangle being placed over the area

So, I decided to modify the algorithm a bit.

- I took the color palette of the original image, and used that as my source of possible colors

- I decided to stop taking two parents and generating a child from them. I simply took the current image, introduced a mutation, and then checked to see if the mutation ended with better results than the existing image.

- I had the triangles get smaller over the time the image was evolving.

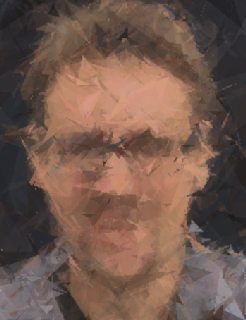

A few more attempts, a bit more debugging, and some more tweaking resulted in the following images. Since I was only mutating the image, I was able to plow through generations much faster. As a result, I upped the number of generations I performed. The following image was created after 25000 generations:

Which I was happy with.

The Final Results

Here is a video of an evaolution using the final process

Since this achieved what I was looking for, I put the project aside...for now.