
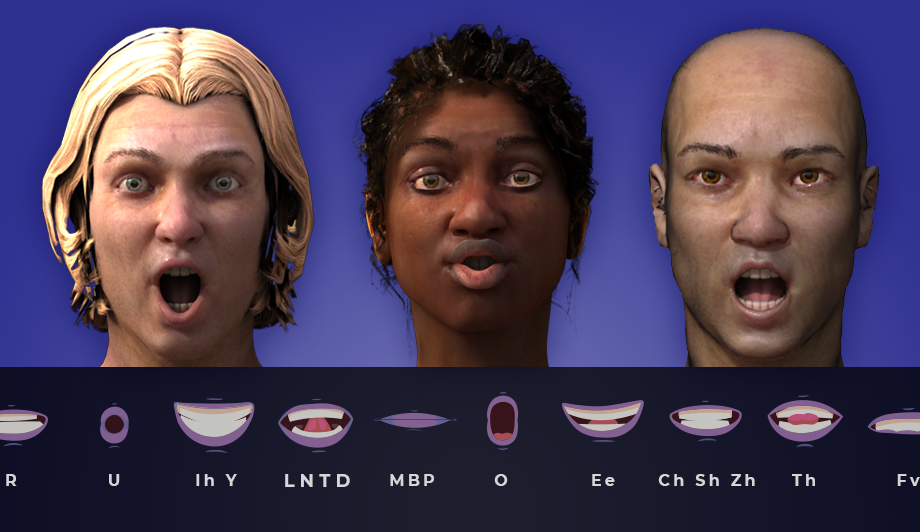

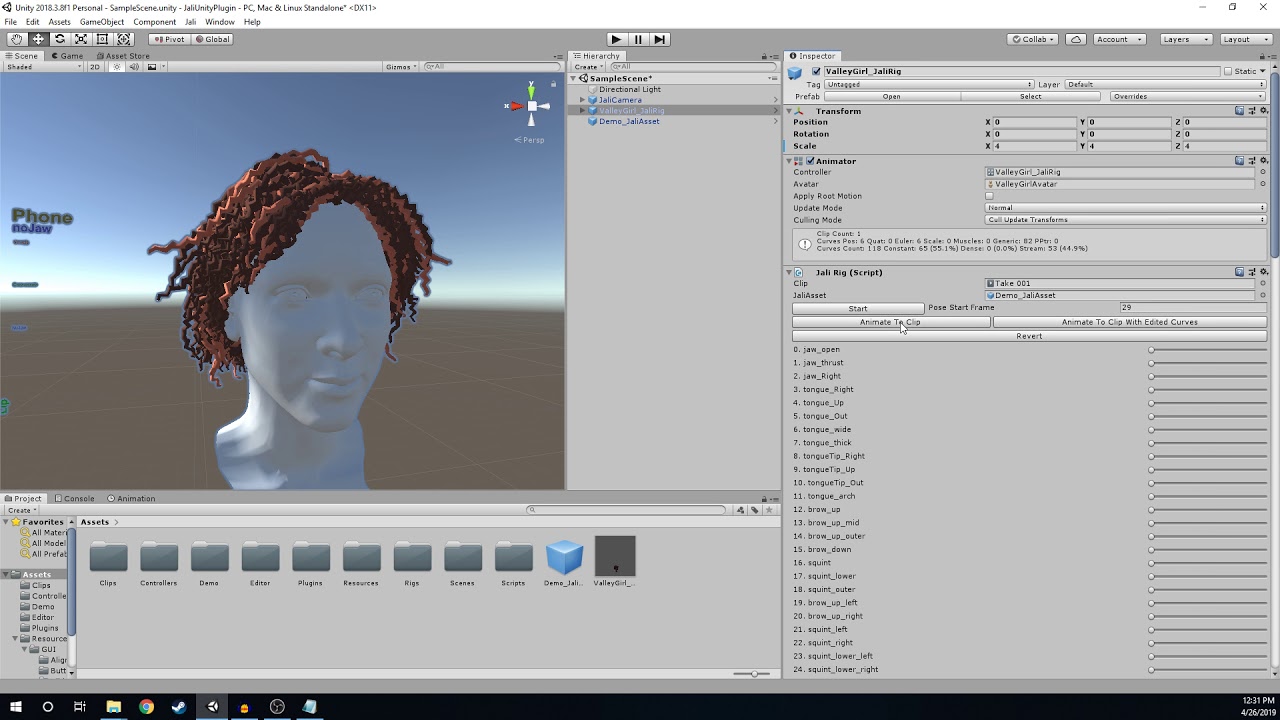

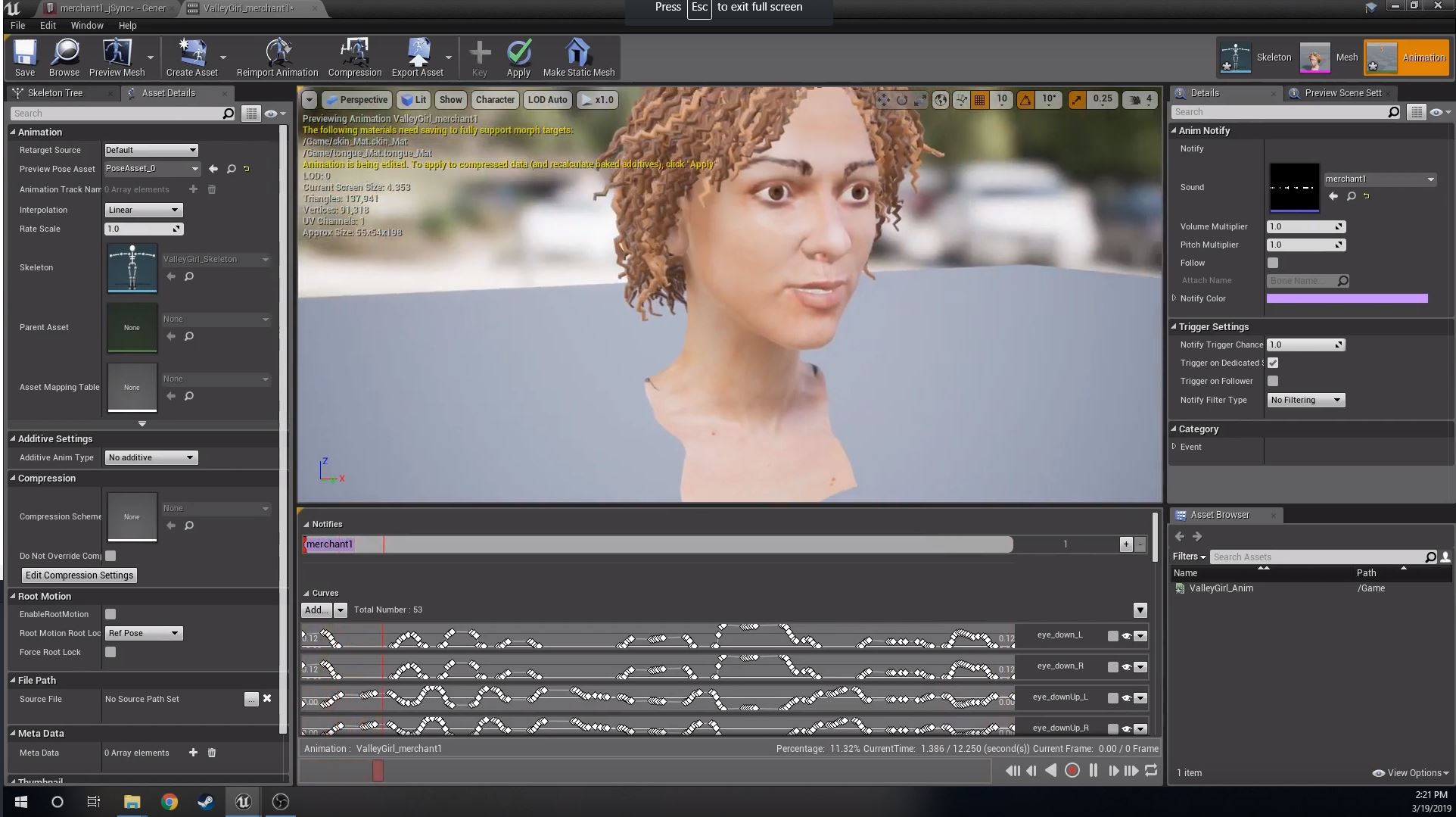

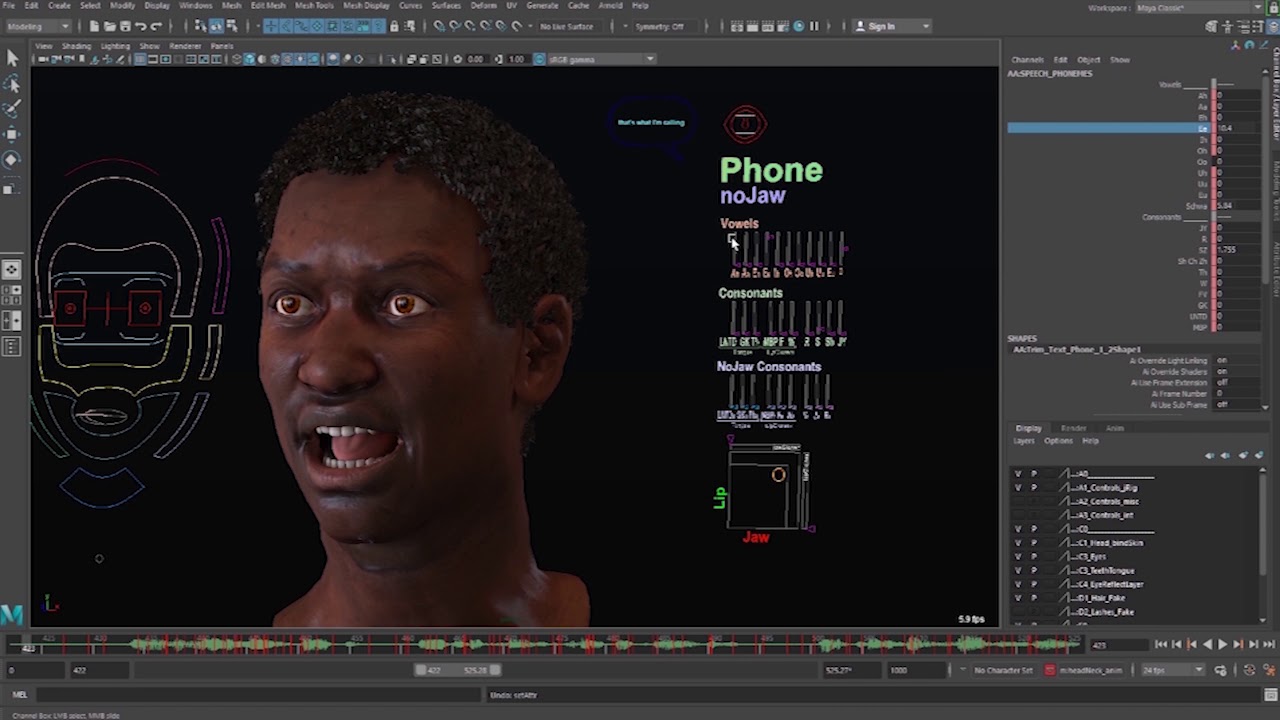

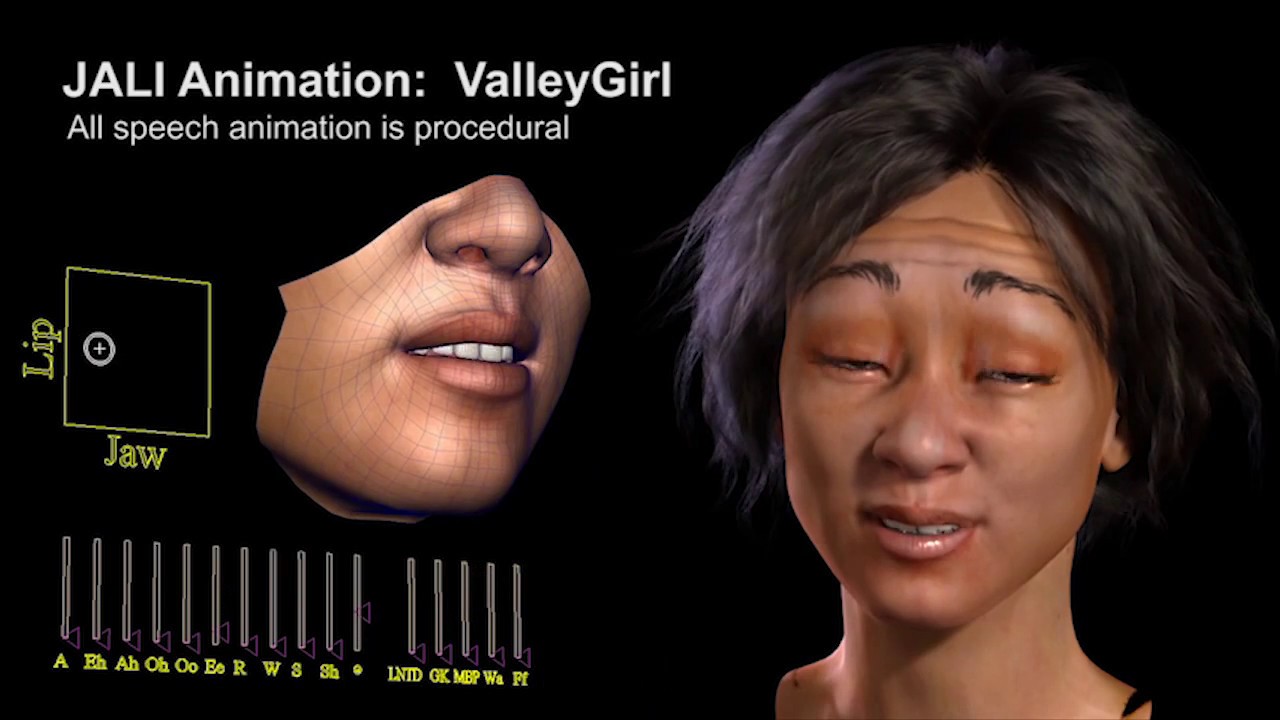

Changing the face of realistic and expressive speech and 3D character animation

Speak Up.