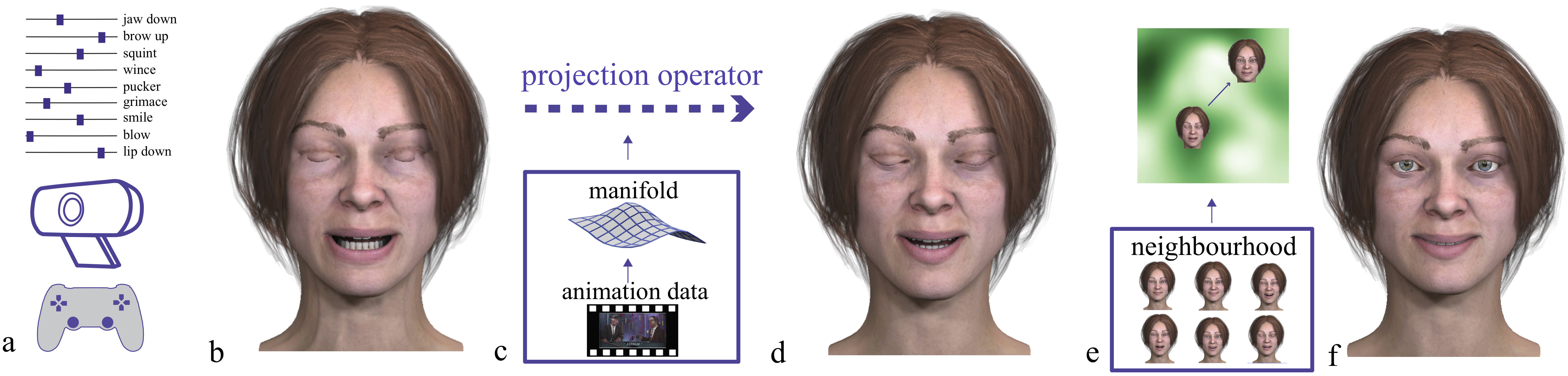

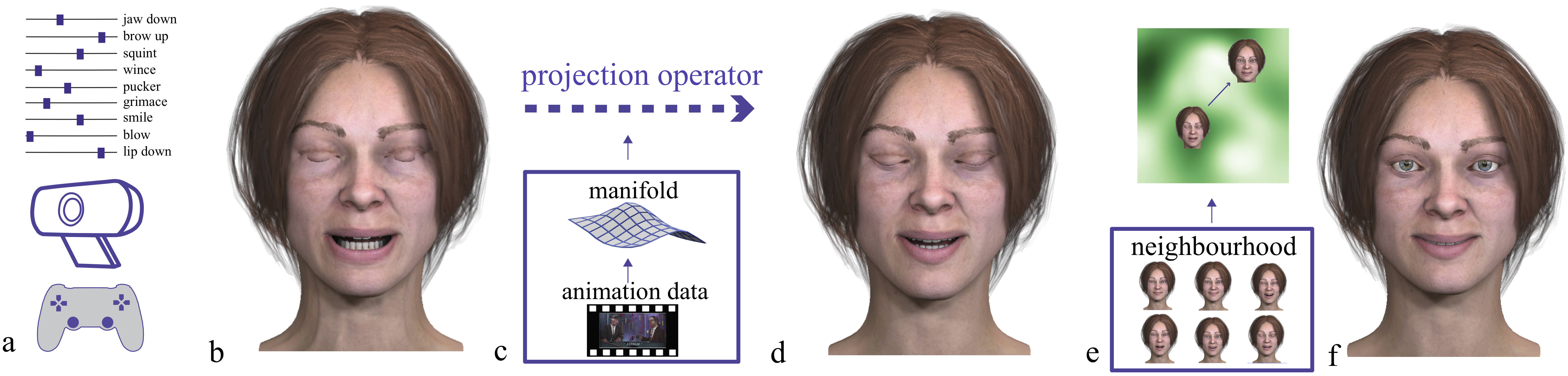

Posing expressive 3D faces is extremely challenging. Typical facial rigs have upwards of 30 controllable parameters that, while anatomically meaningful, are hard to use due to redundancy of expression, unrealistic configurations, and many semantic and stylistic correlations between the parameters. We propose a novel interface for rapid exploration and refinement of static facial expressions, based on a data-driven face manifold of natural expressions. Rapidly explored face configurations are interactively projected onto this manifold of meaningful expressions. These expressions can then be refined using a 2D embedding of nearby faces, both on and off the manifold. Our validation is fourfold: we show expressive face creation using various devices; we verify that our learnt manifold transcends its training face to expressively control very different faces; we perform a crowd-sourced study to evaluate the quality of manifold face expressions; and we report on a usability study that shows our approach is an effective interactive tool for authoring facial expressions.

This work is funded in part by NSERC CRD Grant (508328). We wish to thank Chris Landreth and JALI Research Inc. for providing the data as well as facial rigs, the participants for their time, and the reviewers for their valuable feedback, which helped improve this work.