Depth from Defocus in the Wild

| Overview |

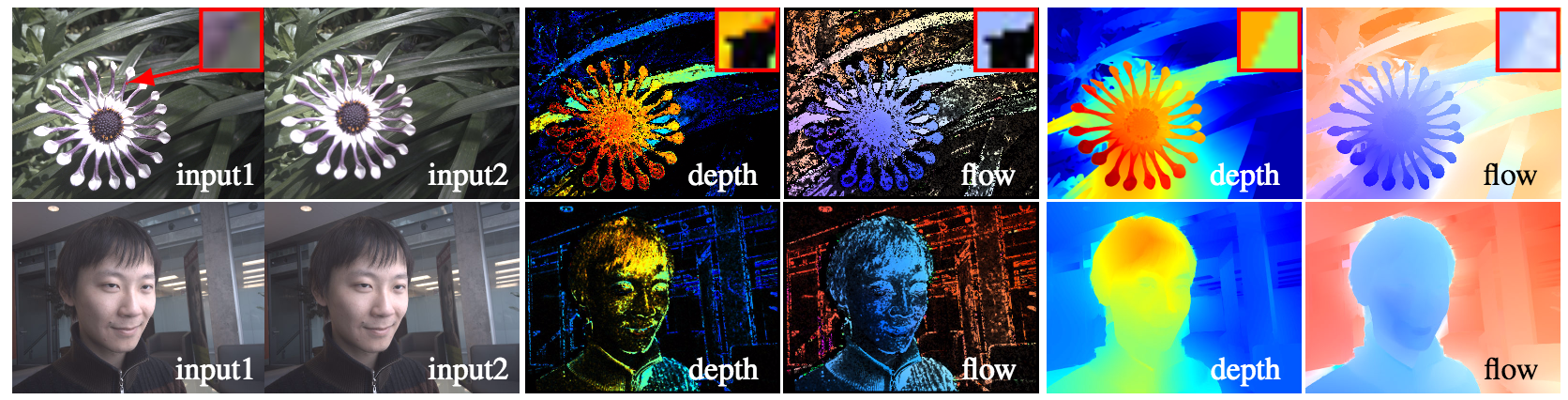

| We consider the problem of two-frame depth from defocus in conditions unsuitable for existing methods yet typical of everyday photography: a non-stationary scene, a handheld cellphone camera, a small aperture, and sparse scene texture. The key idea of our approach is to combine local estimation of depth and flow in very small patches with a global analysis of image content (3D surfaces, deformations, figure-ground relations, and textures). To enable local estimation, we (1) derive novel defocus-equalization filters that induce brightness constancy across frames and (2) impose a tight upper bound on defocus blur, just three pixels in radius, by appropriately refocusing the camera for the second input frame. For global analysis, we use a novel piecewise-smooth scene representation that can propagate depth and flow across large, irregularly shaped regions. Our experiments show that this combination preserves sharp boundaries and yields good depth and flow maps despite significant noise, non-rigidity, and data sparsity. |
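Why equalizing defocus can restore brightness constancy is easiest to see with the textbook cross-blur identity. If frame 1 is the sharp scene blurred by PSF k1 and frame 2 is the same scene blurred by k2, then blurring frame 1 by k2 and frame 2 by k1 yields identical images, because convolution is commutative and associative. The sketch below shows only that classic identity, not the novel defocus-equalization filters derived in the paper. It assumes a static 1D scanline, known PSFs, and no noise, and it uses hypothetical helper names (`box_psf`, `cross_equalize`), with a box kernel standing in for a real disc-shaped defocus PSF.

```python
import numpy as np


def box_psf(radius: int) -> np.ndarray:
    """Hypothetical 1D stand-in for a defocus PSF: a normalized box of
    half-width `radius` pixels (a real lens PSF is closer to a disc)."""
    kernel = np.ones(2 * radius + 1)
    return kernel / kernel.sum()


def cross_equalize(frame1, frame2, psf1, psf2):
    """Blur each frame with the other frame's PSF (textbook cross-blur).

    If frame1 = sharp * psf1 and frame2 = sharp * psf2 (full convolution),
    both outputs equal sharp * psf1 * psf2, so brightness constancy holds.
    """
    eq1 = np.convolve(frame1, psf2, mode="full")
    eq2 = np.convolve(frame2, psf1, mode="full")
    return eq1, eq2


# Hypothetical example: one random scanline seen at two focus settings.
rng = np.random.default_rng(0)
sharp = rng.random(64)
psf1 = box_psf(1)  # mild blur in frame 1
psf2 = box_psf(3)  # three-pixel-radius blur in frame 2
frame1 = np.convolve(sharp, psf1, mode="full")  # length 66
frame2 = np.convolve(sharp, psf2, mode="full")  # length 70

# The raw frames disagree even after aligning their centers...
print(np.allclose(frame1[1:65], frame2[3:67]))  # False
# ...but the cross-blurred frames match (both have length 72).
eq1, eq2 = cross_equalize(frame1, frame2, psf1, psf2)
print(np.allclose(eq1, eq2))  # True
```

With real photographs the PSFs are unknown and vary with depth, so one common approach is to hypothesize a depth per patch, derive the PSF pair that depth would imply, and keep the hypothesis whose equalized patches agree best.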

| People |

| Huixuan Tang (University of Toronto) |

| Scott Cohen (Adobe Research) |

| Brian Price (Adobe Research) |

| Stephen Schiller (Adobe Research) |

| Kiriakos N. Kutulakos (University of Toronto) |

| Related Publication |

| Huixuan Tang, Scott Cohen, Brian Price, Stephen Schiller and Kiriakos N. Kutulakos, Depth from defocus in the wild. Proc. IEEE Computer Vision and Pattern Recognition Conference, 2017. PDF (9.1MB) |

| Supplementary Materials |

| Huixuan Tang, Scott Cohen, Brian Price, Stephen Schiller and Kiriakos N. Kutulakos, Depth from defocus in the wild: Supplementary document. Proc. IEEE Computer Vision and Pattern Recognition Conference, 2017. PDF (3.4MB) |

| Huixuan Tang, Scott Cohen, Brian Price, Stephen Schiller and Kiriakos N. Kutulakos, Depth from defocus in the wild: Additional results and comparison. Webpage |

| Code coming soon. |

| Acknowledgements |

| The support of Adobe Inc. and of the Natural Sciences and Engineering Research Council of Canada (NSERC) under the RGPIN and SPG programs is gratefully acknowledged. |